Next-Gen LLMs & Agentic AI: What Every CTO Needs to Know

In this blog you will learn about the latest reasoning LLMs, Agentic AI frameworks, and steps to deploy them.

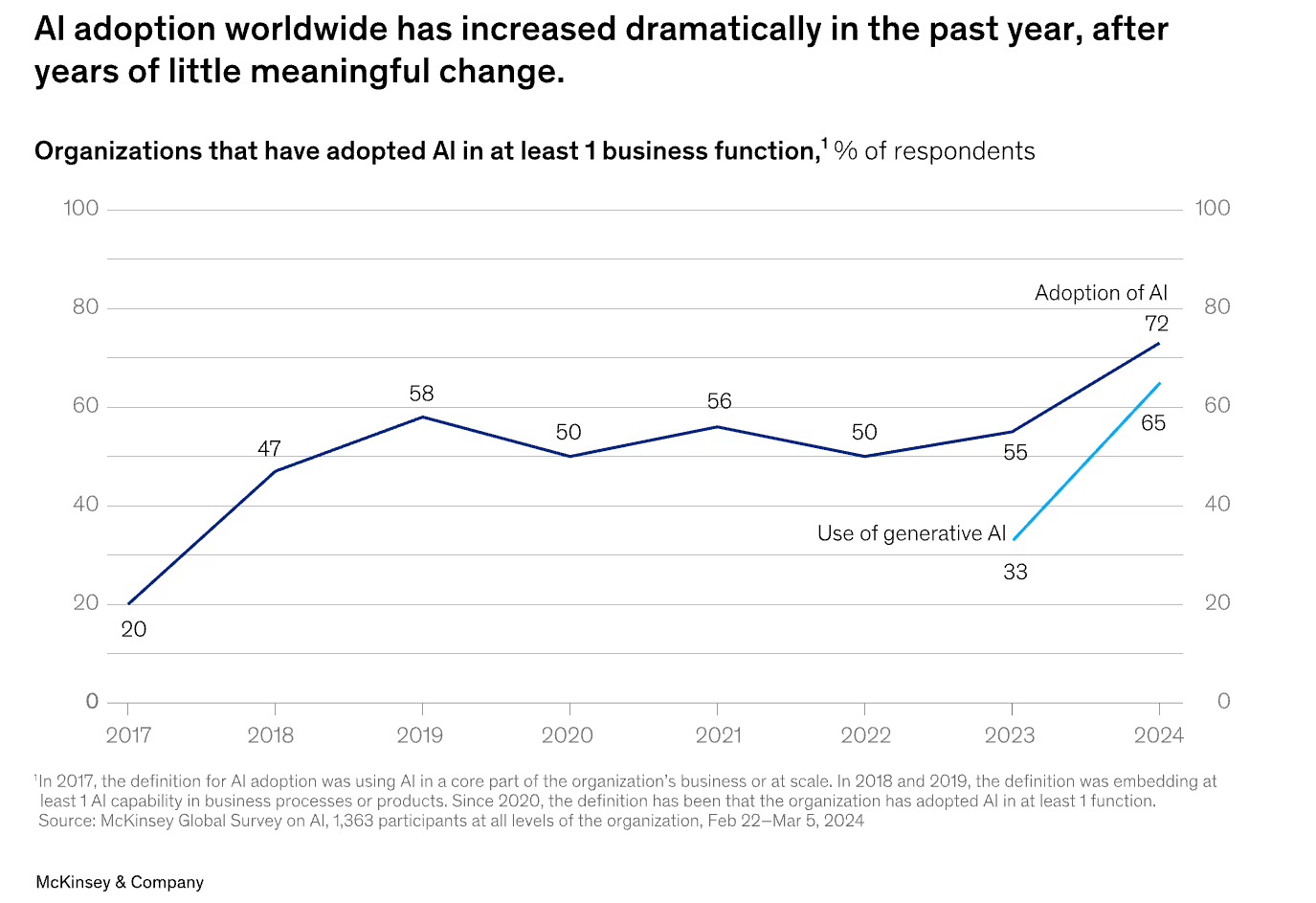

Technology leaders today face a landscape where rapid advancements in large language models (LLMs) and Agentic AI systems are reshaping businesses. Understanding these technologies and their impact on the tech stack, data strategy, and talent requirements is now mission-critical.

These systems are becoming increasingly powerful. A 2024 study from Stanford’s Center for Research on Foundation Models (CRFM) found that leading Agentic AI models (such as OpenAI’s GPT-4o, Google’s Gemini, and Anthropic’s Claude 3 series) can autonomously complete 45-65% of multi-step web-based tasks (e.g., booking travel, managing emails) in controlled benchmarks.

According to a 2024 Gartner survey, about 18% of organizations piloted or deployed Agentic AI systems (such as AI agents for customer service, task automation, or workflow orchestration), up from 8% in 2023. GitHub reported that projects tagged with “AI agents” or “Agentic AI” grew by over 200% year-over-year in the first half of 2024.

If you haven’t started diving into the ensemble of technologies that power this domain, you might be risking the competitive edge your business can gain in the coming years. In this article, you'll gain an insider's view into the next generation of LLMs and Agentic AI and actionable insights for leading your organization's AI transformation. You'll learn about the latest reasoning LLMs, Agentic AI frameworks, and steps to deploy them, so you can plan a roadmap for innovation within your organization.

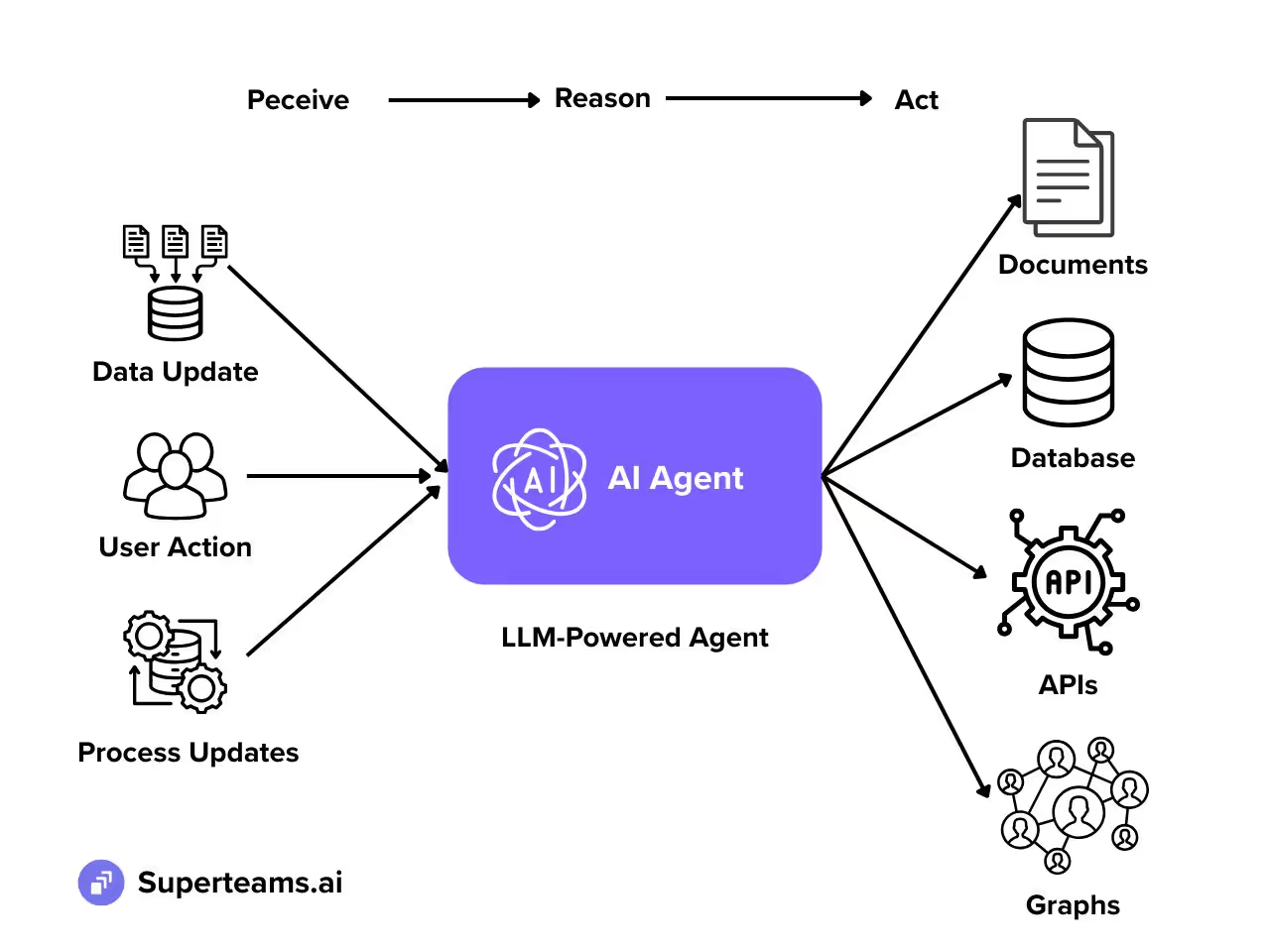

What are AI Agents?

Before we dive in, let's look at the shift in workflow that these systems bring to technology architectures.

Agentic AI refers to systems capable of autonomous decision-making, planning, and execution of complex tasks based on high-level goals. Unlike traditional rule-based or supervised models, Agentic AI systems use LLMs as their brain, together with integrated toolchains, to reason, adapt, and act dynamically within evolving environments.

While AI agents have been around since the early days of artificial intelligence, the recent generation is especially powerful because of the LLMs behind it. These LLMs now have reasoning, multimodal, and vision capabilities, which unlocks a level of autonomy and adaptability that simply wasn’t achievable in the past. These modern AI systems can call tools and can understand and reason over a wide range of data sources.

In the past, you had to write rule engines that worked on specific schemas in your data. With LLM-powered agents, you can simply use the LLM-brain to reason over the underlying schema and adapt your system to work around it.

Contrary to popular belief, the true potential of an LLM comes not from its ability to process language tasks but from its ability to orchestrate tasks, parse complex documents, adapt queries based on a schema, generate code, and eventually adapt based on feedback. This evolution challenges you to rethink the way your organization approaches automation and decision-making.

Next-Gen LLMs: The Brain of Agentic AI Systems

To understand modern AI agents, you must first understand the large language models (LLMs) that power them.

Modern LLMs are not just next-token prediction systems. They can perform multi-step reasoning (o3 or DeepSeek-R1, for instance) and can handle code, text, images, video, audio, and query languages such as SQL or Cypher (a query language for graph databases).

This ability to reason, invoke tools when required, parse documents, and generate JSON-structured outputs fundamentally elevates LLMs from passive responders to adaptive problem-solvers. You can now orchestrate complex workflows, connect LLMs to external systems, receive outputs in precise formats, and enable data-driven automation that is both intelligent and reliable. This unlocks new levels of operational efficiency and innovation for your organization.

Today’s LLMs are not just text generators, they are adaptive reasoning engines that can drive intelligent automation, streamline complex workflows, and act as decision-making co-pilots across functions. For forward-looking organizations, these models offer a strategic lever to gain competitive advantage.

What Modern LLMs Can Actually Do: A Strategic Overview

1. Complex Reasoning and Autonomous Task Execution

Modern LLMs, like GPT-4o and o3, Llama4, Qwen3, Claude 3.7 Sonnet, and DeepSeek, go beyond answering questions. They can break down complex tasks into steps, make intermediate decisions, and simulate planning, making them ideal for business process orchestration and internal tool automation.

Use Case: Financial analysts can delegate exploratory report generation or scenario modeling to an LLM-based assistant that plans, computes, and refines based on live inputs. These agents can be triggered weekly to generate concise reports on their own.

2. Multimodal Intelligence Across Formats

Unlike older LLMs, cutting-edge models can process and generate content across a spectrum of formats: text, images, audio, video, charts, spreadsheets, SQL, Cypher (graph queries), and more. You can, therefore, reason over different data modalities, streamline your ELT/ETL pipeline, and enrich or annotate your data.

Use Case: A marketing AI agent could process customer reviews (text), video feedback (video), perform competitive intelligence, and suggest new marketing ideas daily.

3. Enterprise-Grade Code and API Handling

These models understand and write production-level code, invoke APIs, write SQL queries, and even generate infrastructure templates, turning them into full-fledged digital operators within your technical stack.

Use Case: A CTO could deploy LLM-powered tools to automate internal DevOps tasks or triage issues across engineering backlogs. Or, for instance, you could build agents that transform legacy code into modern languages and frameworks.

4. Tool Invocation and Workflow Automation

LLMs can be configured to trigger external tools and systems, like CRM actions, financial models, or internal knowledge bases, based on natural language prompts and decision rules. Google’s Agent Development Kit (ADK) and Anthropic’s Model Context Protocol (MCP) are designed to streamline tool calling, and numerous frameworks now support tool use.

Use Case: Imagine a customer support AI that doesn’t just reply but also files tickets, pulls account history, updates recommendations, or passes on the ticket to a human agent for refund, all securely and on-demand.

5. Long-Term Memory and Personalization

With context windows now stretching from hundreds of thousands to millions of tokens (e.g., 10 million in Llama4 Scout), these models can handle entire corpora of documents, historical emails, or contracts, supporting deep personalization and traceability.

Use Case: Legal teams could consult an AI assistant with instant recall of past cases, contracts, or clauses, tailored to their firm's style and preferences.

6. Structured, Interoperable Outputs

LLMs can produce structured outputs like JSON, XML, Markdown, or SQL, enabling seamless integration into dashboards, databases, or downstream software, eliminating the need for manual translation.

Use Case: HR systems can ingest candidate summaries, job descriptions, or policy documents directly from LLM output without post-processing.
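Even when model output needs no manual translation, a light validation step before ingestion is cheap insurance. The following is a minimal sketch, assuming the model was asked to return a JSON object; the `raw_output` string stands in for a real model response, and the field names are hypothetical.

```python
import json

# Hypothetical schema: field name -> expected Python type.
REQUIRED_FIELDS = {"name": str, "years_experience": int, "skills": list}

def parse_candidate_summary(raw_output: str) -> dict:
    """Parse model output as JSON and check required fields and types."""
    data = json.loads(raw_output)  # raises a ValueError subclass on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    return data

# Stand-in for a model response; a real system would receive this from the LLM.
raw_output = '{"name": "A. Candidate", "years_experience": 6, "skills": ["SQL", "Python"]}'
summary = parse_candidate_summary(raw_output)
print(summary["skills"])
```

If validation fails, a common approach is to retry the request with the error message appended to the prompt rather than passing malformed data downstream.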

7. Retrieval-Augmented Generation (RAG) Compatibility

Next-gen models integrate easily with vector databases and document retrieval systems. This ensures their responses are grounded in your proprietary data, not just generic internet knowledge.

Use Case: A healthcare provider’s AI assistant can cite research, internal policies, and patient data, while maintaining compliance and traceability.

8. Natural Language Interfaces for Complex Systems

Modern LLMs are bridging the gap between natural language and structured business logic. They can convert plain-English queries into database calls, graph queries, or filter conditions.

Use Case: A COO can type “show me underperforming regions by revenue this quarter” and get SQL-backed, dashboard-ready results in seconds.

9. Domain-Aware Instruction Following

Unlike legacy chatbots, LLMs can be tightly constrained to formats, tone, and rules—allowing organizations to ensure compliance, accuracy, and brand alignment.

Use Case: Regulated industries like finance or insurance can deploy LLMs that respect legal boundaries and documentation protocols.

10. Agentic Coordination Across Teams and Tools

When orchestrated via platforms like LangGraph, CrewAI, or AutoGen, LLMs can operate as part of larger agentic systems—deciding when to act, delegate, or escalate across multiple tools and roles.

Use Case: Think of a product launch assistant that coordinates design, copy, legal approval, and campaign rollout—across Slack, Jira, and Notion—with minimal human intervention.

Organizations that adopt these capabilities often see productivity gains after a few iterations. One example of such automation is Dropbox Dash. In early 2024, Dropbox rolled out “Dropbox Dash,” an Agentic AI system designed to help employees find documents, answer questions, and automate repetitive tasks across multiple apps (Gmail, Slack, Google Drive, etc.). According to Dropbox’s Q1 2024 productivity report, early adopters of Dash saved an average of 30–45 minutes per day per employee on searching and organizing information. In internal surveys, 73% of employees reported they could “focus more on high-value work” thanks to Dash handling routine digital tasks and cross-app information gathering. By the end of 2025, we expect to hear a number of such reports from across industries.

Technical Agentic AI Patterns: Architectures for the Next Decade

The emergence of agentic AI systems introduces a new paradigm for enterprise architecture. We are no longer designing applications where AI is a bolt-on feature; instead, we’re engineering modular systems where LLMs act as autonomous, goal-driven agents, capable of interpreting context, invoking tools, and adapting to feedback in real-time.

Understanding the technical blueprints behind agentic systems is essential to leading meaningful transformation across the organization. Below are the key agentic AI patterns that are shaping modern AI-first system design.

1. LLM as Orchestrator Pattern

At the heart of most agentic AI systems is a reasoning-centric LLM that interprets goals, decomposes them into actionable tasks, and calls tools or external APIs to complete them.

- Core Concept: The LLM becomes a logic engine, using structured prompts or memory-backed workflows to decide what to do next.

- Used In: AutoGPT, LangChain agents, CrewAI roles, Anthropic MCP flows.

- Risks: Lack of deterministic flow; hard to debug if poorly scoped.

Implementation Tip: Keep task boundaries tight; use metadata-rich messages to route tool calls. Ensure you trace every step of the agent execution.
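The orchestrator loop described above can be sketched in a few lines. This is a simplified illustration, not a framework's actual API: `fake_llm_decide` stands in for a real model call, and the tool names are hypothetical. Note the step cap and the trace entry recorded for every tool call.

```python
from typing import Callable

# Hypothetical tools the orchestrator may call.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_docs": lambda query: f"3 documents found for '{query}'",
    "send_summary": lambda text: "summary sent",
}

def fake_llm_decide(goal: str, history: list[dict]) -> dict:
    """Stand-in for the LLM: picks the next action from what has been done so far."""
    if not history:
        return {"tool": "search_docs", "input": goal}
    if len(history) == 1:
        return {"tool": "send_summary", "input": history[0]["output"]}
    return {"tool": None, "input": ""}  # signals that the goal is complete

def run_agent(goal: str, max_steps: int = 5) -> list[dict]:
    """Loop: ask the model for the next step, run the tool, record a trace entry."""
    trace: list[dict] = []
    for step in range(max_steps):  # hard cap keeps the loop bounded
        decision = fake_llm_decide(goal, trace)
        if decision["tool"] is None:
            break
        output = TOOLS[decision["tool"]](decision["input"])
        trace.append({"step": step, "tool": decision["tool"],
                      "input": decision["input"], "output": output})
    return trace

trace = run_agent("Q3 churn report")
for entry in trace:
    print(entry)
```

Keeping the trace as plain data makes it easy to ship to whatever logging or tracing backend you already run.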

2. Planning + Execution Separation Pattern

Inspired by traditional AI, this pattern separates the agent’s planner (LLM) from the executor (toolchain or worker process). The LLM maps intent to steps, but another module (possibly another agent) is responsible for carrying them out.

- Used In: AutoGen (Microsoft), LangGraph, Jina AI’s workflow trees.

- Strengths: Enhances traceability and observability; enables reuse of execution pipelines.

Enterprise Value: Useful for regulated industries where you want humans in the loop or staged approvals for AI actions.
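One way to picture the separation: the planner emits a plan as plain data, and a separate executor decides whether each step may run. The sketch below assumes a hypothetical refund workflow; `plan` stands in for an LLM call, and the action names are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class Step:
    action: str
    argument: str
    needs_approval: bool = False

def plan(goal: str) -> list[Step]:
    """Stand-in for the LLM planner: returns a plan as plain data."""
    return [
        Step("fetch_invoice", goal),
        Step("issue_refund", goal, needs_approval=True),
    ]

def execute(steps: list[Step], approved: set[str]) -> list[str]:
    """Executor: runs steps in order and stops at the first unapproved gated step."""
    log = []
    for step in steps:
        if step.needs_approval and step.action not in approved:
            log.append(f"PAUSED {step.action}: waiting for approval")
            break
        log.append(f"DONE {step.action}({step.argument})")
    return log

steps = plan("order-1042")
print(execute(steps, approved=set()))
print(execute(steps, approved={"issue_refund"}))
```

Because the plan is inspectable before anything runs, it can be reviewed, stored for audit, or replayed against a different executor.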

3. Function Calling / Tool Use Abstraction Pattern

LLMs like GPT-4o, Claude 3.7, and Gemini 2.5 support native function calling, allowing structured requests to internal tools or APIs. This is the backbone of tool-augmented AI agents. Coding copilots like Windsurf IDE, Lovable, and Cursor use this pattern.

- Example: An LLM receives a request, maps it to a tool via function schema, and returns the result within the same conversation.

- Common Tools: Zapier actions, OpenAPI endpoints, internal orchestration APIs.

Pattern Enhancement: Combine with RAG to dynamically populate tool inputs based on recent memory or user history.
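On the application side, function calling usually comes down to a registry of tool schemas and a dispatcher that validates what the model asked for. The sketch below is illustrative only: the call format is a simplified stand-in rather than any vendor's exact wire format, and `get_account_status` is a hypothetical tool.

```python
import json

def get_account_status(account_id: str) -> dict:
    """Hypothetical internal tool."""
    return {"account_id": account_id, "status": "active"}

# Tool registry: a JSON-Schema-style description plus the Python callable.
TOOLS = {
    "get_account_status": {
        "schema": {
            "type": "object",
            "properties": {"account_id": {"type": "string"}},
            "required": ["account_id"],
        },
        "fn": get_account_status,
    }
}

def dispatch(tool_call_json: str) -> dict:
    """Validate a model-issued tool call against the registry, then run it."""
    call = json.loads(tool_call_json)
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise KeyError(f"unknown tool: {call['name']}")
    args = call.get("arguments", {})
    missing = [k for k in tool["schema"]["required"] if k not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return tool["fn"](**args)

# Stand-in for the structured call a model would emit.
model_tool_call = '{"name": "get_account_status", "arguments": {"account_id": "A-17"}}'
result = dispatch(model_tool_call)
print(result)
```

The schema part of each registry entry is what you would send to the model so it knows which tools exist and which arguments they take.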

4. Externalized Long-Term Memory Pattern

Rather than trying to stuff context into prompts, modern agentic systems use vector databases or knowledge graphs as external memory. The agent retrieves relevant snippets or embeddings per task.

- Tools: PGVector, Qdrant, Weaviate, Neo4j.

- Memory Types: Semantic (vector), symbolic (graph), hybrid (indexed structured knowledge).

Caution: Retrieval logic must be explainable and version-controlled. Invest early in memory hygiene.
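At its core, semantic memory retrieval is a nearest-neighbor search over embeddings. The toy sketch below uses hand-written three-dimensional vectors and an in-memory list in place of a real embedding model and vector database; both are assumptions made purely for illustration.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Toy memory: (text, embedding). Real embeddings come from an embedding model.
MEMORY = [
    ("Refund policy: 30 days from delivery", [0.9, 0.1, 0.0]),
    ("Office closes at 6 pm on Fridays", [0.0, 0.2, 0.9]),
    ("Refunds over $500 need manager approval", [0.8, 0.3, 0.1]),
]

def retrieve(query_embedding: list[float], k: int = 2) -> list[str]:
    """Return the k stored snippets most similar to the query embedding."""
    scored = sorted(MEMORY, key=lambda item: cosine(query_embedding, item[1]), reverse=True)
    return [text for text, _ in scored[:k]]

top = retrieve([1.0, 0.2, 0.0])
print(top)
```

A vector database performs the same ranking at scale with approximate indexes, which is why the retrieval parameters (k, similarity metric, filters) deserve version control like any other logic.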

5. Schema-Aware Query Pattern

Agents can now interpret database schemas or APIs dynamically using LLMs. Instead of hardcoding data access logic, agents ask: "What does this schema mean?"—then build queries accordingly.

- Ideal For: Internal dashboards, report generation, ad hoc analysis.

- Works With: Postgres + pgvector + pgai, MongoDB, DuckDB, and others.

Use Case: A marketing manager types: “Show me leads from Tier 1 cities who haven’t been contacted in 2 weeks.” The agent infers the query and outputs a valid SQL script.
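A schema-aware flow typically has two halves: read the live schema into the prompt, then check the generated SQL before running it. The sketch below uses SQLite from the Python standard library as a stand-in database; the `leads` table and the `generated_sql` string are hypothetical, and the safety check is deliberately simple.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE leads (id INTEGER PRIMARY KEY, city_tier INTEGER, last_contacted TEXT)")
conn.executemany(
    "INSERT INTO leads (city_tier, last_contacted) VALUES (?, ?)",
    [(1, "2024-01-01"), (1, "2024-03-01"), (2, "2024-01-01")],
)

def describe_schema(connection: sqlite3.Connection) -> str:
    """Read table definitions from SQLite's catalog, for inclusion in the prompt."""
    rows = connection.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] for row in rows)

def is_safe_select(connection: sqlite3.Connection, sql: str) -> bool:
    """Accept only a single SELECT that SQLite can plan against the real schema."""
    stripped = sql.strip().rstrip(";")
    if not stripped.lower().startswith("select") or ";" in stripped:
        return False
    try:
        connection.execute(f"EXPLAIN QUERY PLAN {stripped}")
        return True
    except sqlite3.Error:
        return False

prompt = (f"Schema:\n{describe_schema(conn)}\n\n"
          "Question: leads in Tier 1 cities not contacted since 2024-02-15")
# Stand-in for the SQL a model might return for the prompt above.
generated_sql = "SELECT id FROM leads WHERE city_tier = 1 AND last_contacted < '2024-02-15'"

if is_safe_select(conn, generated_sql):
    print(conn.execute(generated_sql).fetchall())
```

In production you would likely add a read-only database role on top of this check, since string inspection alone is not a security boundary.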

6. Multi-Agent Collaboration Pattern

Not all agents must be monolithic. In this pattern, specialized agents (think: roles) collaborate via structured protocols—planner, coder, reviewer, devops, analyst—each handling specific steps with domain expertise.

- Orchestration Platforms: CrewAI, LangGraph, MetaGPT, AutoGen.

- Benefits: Scalability, modular debugging, natural specialization.

Emerging Trend: Role memory—agents remember and evolve their responsibilities over time, becoming more effective in ongoing tasks.

7. Self-Critique and Feedback Loop Pattern

Agentic systems are moving beyond single-shot responses. With recursive feedback (either from humans or auto-evaluators), agents critique and refine their outputs.

- Patterns Include: Reflexion (self-critique), ReAct (interleaved reasoning and acting), Constitutional AI (value alignment).

- Application: Compliance checks, content QA, negotiation agents.

Tip: Log every revision and chain of thought. These become future fine-tuning or RAG inputs.
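A feedback loop of this kind can be reduced to three functions: generate, critique, and refine. In the sketch below, `draft` and `critique` are stand-ins for model calls (or an automated evaluator), and the disclaimer rule is a made-up example of a compliance check.

```python
def draft(task: str, feedback: list[str]) -> str:
    """Stand-in for the generating model; adds a disclaimer once asked to."""
    text = f"Offer for {task}: 20% discount."
    if any("disclaimer" in note for note in feedback):
        text += " Terms and conditions apply."
    return text

def critique(text: str) -> list[str]:
    """Stand-in for an auto-evaluator; returns a list of problems (empty means pass)."""
    return [] if "Terms and conditions" in text else ["add a disclaimer"]

def refine(task: str, max_rounds: int = 3) -> tuple[str, list[dict]]:
    """Generate, critique, and regenerate until the critic passes or rounds run out."""
    feedback: list[str] = []
    revisions: list[dict] = []
    text = ""
    for round_no in range(max_rounds):
        text = draft(task, feedback)
        problems = critique(text)
        revisions.append({"round": round_no, "text": text, "problems": problems})
        if not problems:
            break
        feedback.extend(problems)
    return text, revisions

final_text, revisions = refine("annual plan")
print(final_text)
print(len(revisions), "revisions logged")
```

The `revisions` list is the log the tip above refers to: each round's text and the problems found against it.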

8. Human-in-the-Loop (HITL) Guardrail Pattern

Agentic AI must be controllable. Enterprise deployments use review gates where LLMs ask humans for sign-off before executing sensitive steps.

- Triggers: High-risk actions, low confidence, ambiguous instructions.

- Integration Points: Slack approvals, dashboard sign-offs, audit trails.

Use Case: AI-generated pricing model gets human validation before rollout.
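A review gate can be as simple as a predicate over the proposed action. The sketch below assumes the agent (or a separate scorer) supplies a confidence value; the action names and the 0.8 threshold are hypothetical and would need tuning per use case.

```python
from dataclasses import dataclass

HIGH_RISK_ACTIONS = {"issue_refund", "change_pricing"}  # hypothetical action names
CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff; tune per use case

@dataclass
class ProposedAction:
    name: str
    confidence: float  # assumed to be supplied by the agent or a separate scorer

def needs_human_review(action: ProposedAction) -> bool:
    """Gate on either the kind of action or the agent's confidence in it."""
    return action.name in HIGH_RISK_ACTIONS or action.confidence < CONFIDENCE_THRESHOLD

def handle(action: ProposedAction, review_queue: list[ProposedAction]) -> str:
    """Run low-risk, high-confidence actions; queue everything else for sign-off."""
    if needs_human_review(action):
        review_queue.append(action)
        return "queued_for_review"
    return "executed"

queue: list[ProposedAction] = []
print(handle(ProposedAction("send_reminder", 0.95), queue))
print(handle(ProposedAction("change_pricing", 0.99), queue))
print(handle(ProposedAction("send_reminder", 0.40), queue))
```

In a real deployment the queue would feed a Slack approval or dashboard sign-off, and every decision would be written to the audit trail.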

Tip: Think of these patterns as architectural guides. They help you design intelligent, modular, and scalable AI systems that go beyond demos and truly deliver value in production.

How to Deploy Agentic AI Systems

Designing an intelligent agent is one thing. Deploying it at scale—securely, reliably, and cost-effectively—is quite another. The move from demo-stage to enterprise-grade Agentic AI systems requires robust infrastructure, clear routing logic, and smart architecture choices that ensure observability, scalability, and control.

This section walks you through the technical pillars of deploying Agentic AI systems at scale.

1. Modular System Design: Decompose the Agent

A scalable agent should be decomposed into three modular components:

- Planner (LLM brain): Interprets the user goal, breaks it down into sub-tasks, and decides on tool invocation.

- Executor (Tools/APIs): Executes those tasks—e.g., calling an API, querying a database, or invoking a microservice.

- Memory (Knowledge Layer): Contextualizes decisions with external memory like vector databases or session history.

Why this matters: Each component can then be scaled independently, versioned separately, and given its own fallback mechanisms.

2. Routing Logic: Dynamic Decision-Making Infrastructure

Agentic systems should not call the LLM for every request blindly. Instead, you must implement a routing layer that can:

- Classify user intent (question, action request, summarization, generation)

- Decide the correct pathway (e.g., use RAG, call API directly, escalate to human)

- Minimize unnecessary LLM calls (using heuristics, classifiers, or regex rules)

Recommended Tools: FastAPI, LangGraph/LangChain Router, Semantic Router, or a custom rules engine backed by OpenAI’s function-calling JSON schema. Remember, if you need predictability, use structured outputs to guide the LLM response.
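A rule-based first pass is often enough to keep obvious requests away from the LLM. The sketch below is a minimal illustration using regular expressions; the patterns and route names are made up, and a production router would likely combine rules like these with a small classifier.

```python
import re

# Ordered rules: first match wins. Patterns and route names are illustrative.
RULES = [
    (re.compile(r"\b(refund|cancel|chargeback)\b", re.I), "escalate_to_human"),
    (re.compile(r"\b(order|invoice)\s+#?\d+\b", re.I), "call_api"),
    (re.compile(r"\b(policy|how do i|what is)\b", re.I), "rag"),
]

def route(message: str) -> str:
    """Return a pathway using cheap rules; fall back to the LLM only when none match."""
    for pattern, pathway in RULES:
        if pattern.search(message):
            return pathway
    return "llm"

for msg in ["Where is order #4521?", "What is your return policy?",
            "I want a refund", "Write a haiku about our product"]:
    print(msg, "->", route(msg))
```

Rule order encodes priority: here, anything mentioning a refund is escalated even if it also contains an order number.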

3. Scaling LLM Calls: Efficient Resource Management

LLM calls are expensive and can become a bottleneck. You’ll need strategies to manage scale:

- Async Batched Inference: Use asynchronous and batched requests to improve throughput and reduce per-request cost.

- Token Budgeting: Compress or prune memory/context intelligently.

- Multi-model Routing: Route trivial tasks to smaller models (e.g., Mistral, Gemini Flash) and complex tasks to higher-cost models (e.g., Llama4, GPT-4o, Claude Sonnet).

- Caching Layer: Use tools like Redis or Helicone to cache frequent requests/responses.

Example: Use Claude 3.7 for multimodal tasks, GPT-4.1 for complex reasoning, and Mistral-7B for small language tasks, all routed by context.
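Multi-model routing and caching can be combined in a thin wrapper around the provider call. In the sketch below, the model tier names are placeholders, `cached_completion` stands in for a real API call, and an in-process `lru_cache` substitutes for a shared cache such as Redis.

```python
from functools import lru_cache

stats = {"provider_calls": 0}  # counts how often the (simulated) provider is hit

def pick_model(prompt: str, has_image: bool = False) -> str:
    """Choose a model tier with simple heuristics. Tier names are placeholders."""
    if has_image:
        return "multimodal-model"
    if len(prompt.split()) > 50 or "step by step" in prompt.lower():
        return "large-reasoning-model"
    return "small-model"

@lru_cache(maxsize=1024)
def cached_completion(model: str, prompt: str) -> str:
    """Stand-in for a provider call; identical (model, prompt) pairs hit the cache."""
    stats["provider_calls"] += 1
    return f"[{model}] response to: {prompt}"

def complete(prompt: str, has_image: bool = False) -> str:
    return cached_completion(pick_model(prompt, has_image), prompt)

complete("Translate 'hello' to French")
complete("Translate 'hello' to French")  # served from cache, no provider call
complete("Plan the migration step by step")
print(stats["provider_calls"])
```

Exact-match caching only helps with repeated prompts; whether that covers enough traffic to matter depends on your workload.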

4. Memory Architecture: Externalize and Organize

Avoid hardcoding memory into prompts. Use:

- Vector DBs (PGVector, Qdrant, Weaviate): For semantic recall of knowledge, long-term memory, and document indexing.

- Metadata Filters: Combine semantic + symbolic filtering for precise context injection.

- Session Stores (Redis, Postgres): Maintain working memory per user/agent session.

Best Practice: Design your memory schema to mirror business workflows (e.g., customer journey stages, ticket history, etc.). Ensure your data ingestion pipeline yields a high signal-to-noise ratio in the data.

5. Observability and Evaluation Infrastructure

A production agent should be observable like any backend system.

- Log every decision: LLM inputs/outputs, tool calls, retrieved docs, tool outputs.

- Build dashboards: Track model performance, latency, token usage, and error rates.

- Automate evals: Use tools like PromptLayer, TruLens, or HumanLoop for regression testing and drift detection.

Security Note: Ensure no sensitive data is stored unencrypted. Log anonymized traces or redact PII.
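Redaction can be applied at the point where trace records are built, so raw PII never reaches the log store. The sketch below masks emails and phone-like numbers with two regular expressions; it is a simple illustration, not a complete PII filter, and the record fields are assumptions.

```python
import json
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text: str) -> str:
    """Mask emails and phone-like numbers. A simple sketch, not a complete PII filter."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))

def trace_record(step: str, payload: str, latency_ms: int, tokens: int) -> str:
    """Build one JSON log line for an agent step, with the payload redacted."""
    return json.dumps({
        "step": step,
        "payload": redact(payload),
        "latency_ms": latency_ms,
        "tokens": tokens,
    })

line = trace_record("llm_input", "Email jane.doe@example.com or call +1 415 555 0100", 820, 512)
print(line)
```

One JSON object per line keeps the traces easy to load into whichever dashboard or evaluation tool you use.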

6. Deployment Stack & Orchestration

A scalable stack typically includes containerization (Docker), load balancing (NGINX), and GPU/CPU autoscaling where required.

7. Governance, Guardrails & Role-Based Access

Production-grade Agentic AI needs control layers:

- Role-based access: Limit which agents can take action (e.g., refund, escalate).

- Guardrails: Define unsafe prompts, action boundaries, PII handling policies.

- Feedback Capture: Enable thumbs up/down or inline corrections to fine-tune system behavior.
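Role-based access for agents can follow the same deny-by-default approach used for human users. The sketch below uses a hypothetical permission table; the role and action names are illustrative only.

```python
# Hypothetical mapping from agent role to the actions it may take.
PERMISSIONS = {
    "support_agent": {"read_account", "create_ticket"},
    "billing_agent": {"read_account", "issue_refund"},
}

class ActionNotPermitted(Exception):
    """Raised when an agent role attempts an action it has not been granted."""

def authorize(role: str, action: str) -> None:
    """Raise unless the role is explicitly allowed to perform the action."""
    if action not in PERMISSIONS.get(role, set()):
        raise ActionNotPermitted(f"{role} may not perform {action}")

def perform(role: str, action: str) -> str:
    authorize(role, action)  # check before any side effect happens
    return f"{action} performed by {role}"

print(perform("billing_agent", "issue_refund"))
try:
    perform("support_agent", "issue_refund")
except ActionNotPermitted as err:
    print("blocked:", err)
```

Unknown roles get an empty permission set, so a misconfigured agent fails closed rather than open.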

8. Continuous Improvement Loop

Adopt an active learning loop:

- Capture user feedback and tool errors.

- Route misfires into human review or re-labeling queues.

- Retrain or fine-tune components using real-world edge cases.

- Push updates safely into production with canary rollout or gating.

Outcome: The system gets smarter over time, learning your org’s preferences, data quirks, and edge cases.

Tip: Don’t Just Ship AI, Systematize It

Deploying Agentic AI systems isn’t about shipping an intelligent assistant. It’s about building a new adaptive software layer for your organization, one that learns, reasons, and acts.

Conclusion

We’re entering a new era of enterprise AI, where systems don’t just respond, they act. Modern LLMs combined with agentic architectures are reshaping how companies automate workflows, make decisions, and scale innovation. For CTOs and technology leaders, the opportunity is clear: start building adaptive, AI-native systems that learn and evolve with your business.

The companies that move first won’t just gain efficiency, they’ll reshape their industries.

Superteams.ai helps forward-thinking organizations design, build, and deploy custom AI agents using a collaborative R&D-as-a-Service model.

👉 Get in touch to prototype your first agent and accelerate your AI transformation.