NextNeural: 100+ Pre-Built Agentic AI Workflows for Businesses

NextNeural is an API-first control layer with 100+ sovereign AI agents helping enterprises automate customer support, document processing and compliance workflows with secure data control.

Businesses are rapidly exploring AI integration across operations, yet many find themselves stalled — either in endless hiring cycles or buried under the complexity of orchestrating dozens of AI models. Existing frameworks tend to be brittle, optimized for specific vendors or platforms, and rarely address the core enterprise concern: sovereignty.

According to recent research by the Linux Foundation, around 79% of organizations view “sovereign AI” as a strategic priority. Further, 72% cite data control as a driver of sovereignty, while 90% see open-source infrastructure as essential to achieving it.

We launched Superteams.ai with a single goal — to bring ethical, compliant and open source-powered AI workflows to enterprises. Over the last two years, we have helped multiple organizations leverage our network of AI researchers and developers to research, prototype, and productize advanced AI systems using open source AI models.

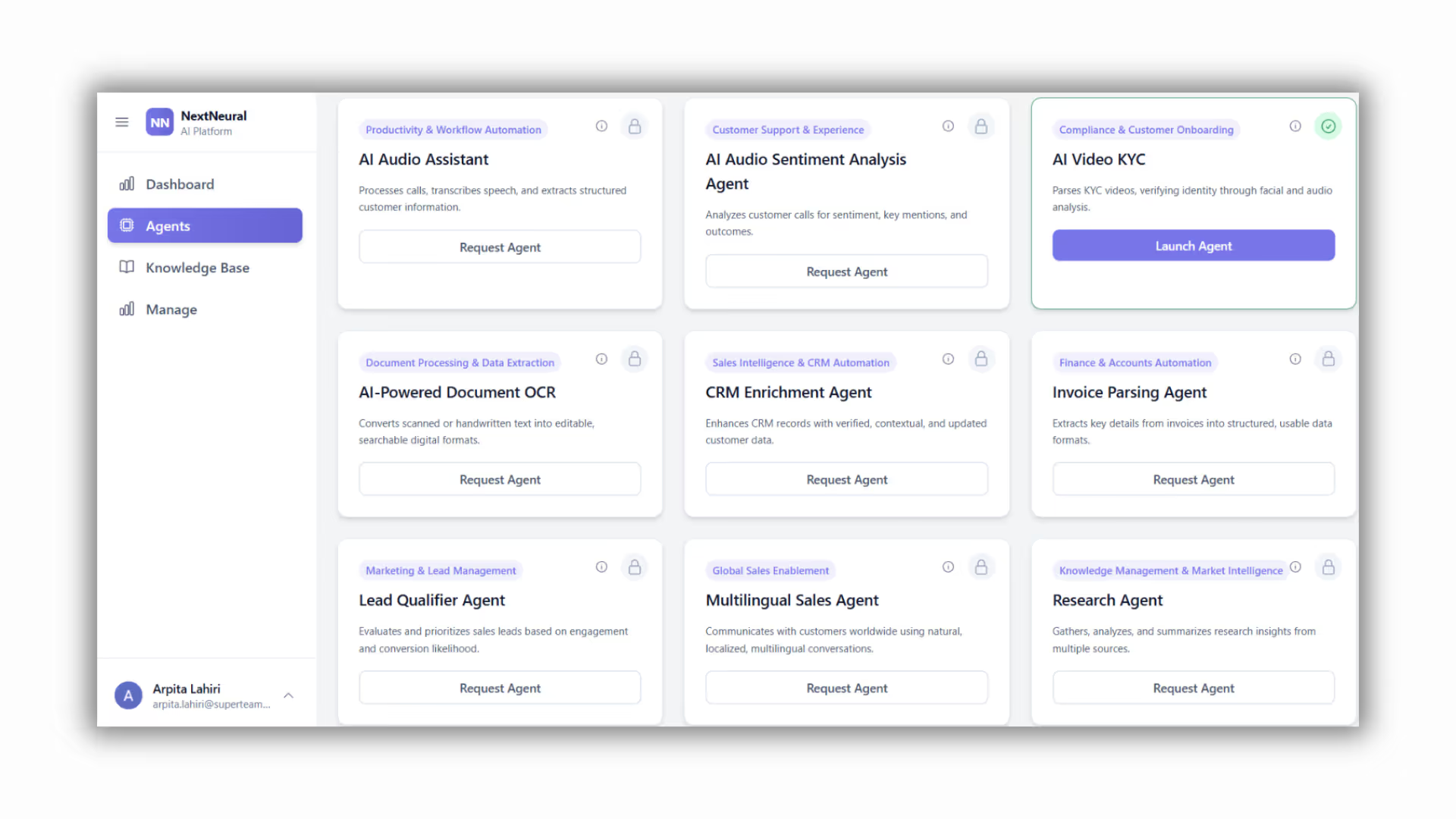

Today, we are rolling out NextNeural, an AI agent control layer built to accelerate enterprise AI adoption. NextNeural gives enterprises access to over 100 prebuilt AI agents through an API-first architecture. These agents use open source language, vision, and audio models, allowing teams to integrate and orchestrate multimodal intelligence across workflows.

NextNeural: A Brief Overview

During enterprise deployments, we observed a consistent pattern — the same foundational workflows repeat in nearly every AI project. Each deployment, regardless of industry or model choice, required the same backbone: authentication and authorization, role-based access control (RBAC), orchestration of multiple models and agents, retrieval-augmented generation (RAG), and continuous monitoring of the underlying infrastructure.

These elements form the invisible scaffolding of every serious AI implementation. Yet, most enterprises still rebuild them from scratch — reinventing authentication layers, integrating orchestration frameworks piecemeal, and patching together observability tools. The result is slower deployment, higher cost, and fragile systems that can’t scale or comply with enterprise governance standards.

This realization shaped the foundation of NextNeural — a unified orchestration layer designed to abstract these repeated workflows and make enterprise-grade AI deployment fast, secure, and sovereign by default.

NextNeural streamlines how enterprises access, govern, and scale sovereign AI agents. It provides a unified control layer that allows organizations to deploy 100+ prebuilt agents and extend them with custom integrations, while retaining full control over their infrastructure and data.

Through its API-first architecture, enterprises can directly access and embed these pre-built agents into their existing products, workflows, and internal systems. Each agent — whether for language, vision, or audio tasks — is available via secure APIs, enabling enterprises to compose intelligent, compliant workflows without rebuilding core infrastructure.
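To make the API-first idea concrete, here is a minimal sketch of how a client might prepare a call to one prebuilt agent. The base URL, the `/agents/{agent_id}/run` path, the payload fields, and the bearer-token header are all assumptions made for illustration; they are not taken from NextNeural's documentation, so check the actual API reference before relying on any of them.

```python
import json
import urllib.request

# Hypothetical values: the base URL, path layout, and payload fields are
# placeholders for illustration, not NextNeural's documented API.
BASE_URL = "https://nextneural.example.com/api/v1"


def build_agent_request(agent_id: str, payload: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that would invoke one agent."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/agents/{agent_id}/run",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


request = build_agent_request(
    agent_id="ocr",
    payload={"document_url": "https://files.example.com/invoice.pdf"},
    api_key="YOUR_API_KEY",
)
print(request.get_method(), request.full_url)

# Sending the request would look like this (left commented out because the
# endpoint above is a placeholder):
# with urllib.request.urlopen(request, timeout=30) as response:
#     result = json.load(response)
```

Keeping request construction separate from sending makes the integration easy to unit test and to point at a different environment (cloud or self-hosted) by changing only the base URL.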

Running on-premises or in the cloud, NextNeural makes sovereign AI practical: connected agents, governed workflows, and a foundation built for scale and control.

NextNeural Features

NextNeural comes with authorization, authentication, role-based access control (RBAC), rate limiting, and a streamlined API access layer that allows enterprises to easily connect with 100+ prebuilt agent workflows for language, vision, and audio applications.

Key Features:

- Unified Orchestration Layer - Manage and coordinate multiple AI agents and models through a single interface. NextNeural automates agent communication, dependencies, and execution pipelines across modalities.

- API-First Architecture - Every prebuilt agent and workflow is accessible via secure REST APIs, enabling seamless integration into existing systems, CRMs, and data platforms without custom engineering overhead.

- Sovereign Deployment Options - Deploy on-premises, in private cloud, or in hybrid environments while maintaining complete control over data, models, and infrastructure — critical for regulatory and compliance-sensitive industries.

- Prebuilt Agent Library - Access over 100 ready-to-use agent templates covering audio intelligence, OCR, video KYC, document intelligence, knowledge retrieval, lead generation, CRM enrichment, IVR call analytics, and more.

- RBAC and Access Governance - Fine-grained control over agent permissions and workflows for enterprise users ensures secure collaboration across teams and departments while maintaining auditability and compliance.

- Observability and Monitoring - Built-in dashboards provide real-time visibility into agent usage, cost, and infrastructure health, enabling proactive optimization and governance.

- Scalable and Modular Architecture - Designed for enterprise workloads, NextNeural scales horizontally to manage thousands of concurrent agents.

- Extensibility with Custom Integrations - Developers can extend prebuilt workflows, request integration of new models (LLMs, video, or audio), and build on top of NextNeural agents using API and SDK.

Together, these features position NextNeural as more than an orchestration tool — it’s a full-stack control layer for enterprise AI. By standardizing the way agents, models, and data interact, it eliminates the need for ad-hoc infrastructure and fragmented integrations. Enterprises can move from experimentation to production within weeks, reusing secure, prebuilt workflows while maintaining sovereignty over every layer — from model selection to data governance.
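As an illustration of the RBAC feature listed above, the following sketch shows the general shape of a role-based permission check. The role names, permission strings, and the `is_allowed` helper are hypothetical; how NextNeural models roles and permissions internally is not described here.

```python
from dataclasses import dataclass, field

# Hypothetical roles and permission strings, for illustration only.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "admin": {"agent:run", "agent:configure", "usage:view"},
    "analyst": {"agent:run", "usage:view"},
    "viewer": {"usage:view"},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def is_allowed(user: User, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in user.roles
    )


analyst = User(name="priya", roles={"analyst"})
print(is_allowed(analyst, "agent:run"))        # analysts may run agents
print(is_allowed(analyst, "agent:configure"))  # but may not reconfigure them
```

Unknown roles grant nothing, so the check fails closed when a role is misspelled or removed.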

Key Agents Live on NextNeural

NextNeural ships with a growing library of 100+ prebuilt, production-ready agents designed to solve real enterprise problems across domains — from customer support to compliance, sales, and research. These agents can be directly accessed via APIs, customized with enterprise data, or combined into multi-agent workflows that mirror organizational processes.

Featured Agents include:

- Call Recording Analysis Agent - Transcribes and analyzes call recordings in real time, extracting sentiment, key topics, and compliance risks. Integrates with ASR systems like Whisper and enterprise CRMs.

- Support Call Analysis Agent - Evaluates customer interactions, identifies recurring issues, and automatically generates summaries, QA insights, and escalation tags for support teams.

- Video KYC Agent - Automates video-based identity verification using vision and OCR models, detecting anomalies and ensuring compliance with financial KYC regulations.

- Audio Intelligence Agent - Processes audio streams for intent recognition, speaker segmentation, and emotion analysis. Ideal for contact centers, voice bots, and analytics platforms.

- OCR Agent - Extracts structured data from invoices, forms, and scanned documents with high accuracy, leveraging OCR and multimodal fusion pipelines.

- Researcher Agent - Gathers, synthesizes, and summarizes data from internal and external sources, assisting teams with literature reviews, due diligence, or market exploration.

- LLM-SEO Agent - Generates SEO-optimized content using fine-tuned LLMs, automating topic research, keyword clustering, and structured content generation at scale.

- CRM Enrichment Agent - Enriches CRM entries with verified company data, contact details, and insights, connecting directly to enterprise databases or SaaS CRMs.

- Sales Email Generation Agent - Crafts personalized sales and outreach emails aligned with CRM insights, tone preferences, and campaign goals. It can be fully automated through API triggers.

- Market Research Agent - Combines web intelligence, trend detection, and language modeling to produce competitor insights, product comparisons, and strategic summaries.

…and more.

These agents form the building blocks of the enterprise AI ecosystem — modular, composable, and secure. Each one is configurable via API and can be orchestrated together through NextNeural’s control layer to create complex, domain-specific AI workflows without additional infrastructure overhead.

Underlying Architecture

At its core, NextNeural is designed for flexibility, interoperability, and sovereignty. It supports multiple deployment modes — from fully self-hosted environments to integrations with third-party inference endpoints — giving enterprises control over both infrastructure and model selection.

Model Layer:

NextNeural can run on vLLM-hosted models or connect to external endpoints such as OpenRouter, NovitaAI, and other API gateways. It supports leading LLMs like gpt-oss-120b, Llama 4, Kimi K2 Thinking, DeepSeek, and any compatible open or proprietary model chosen by the enterprise. This ensures freedom from vendor lock-in while maintaining performance and cost efficiency.

Multimodal Systems:

The platform integrates automatic speech recognition (ASR) systems like Whisper for audio processing and optical character recognition (OCR) pipelines for document understanding. Together, these enable language, vision, and audio agents to collaborate across enterprise workflows.

Knowledge and Retrieval Layer:

NextNeural uses Postgres with pgvector, or Qdrant, as its retrieval backend, supporting dense retrieval, sparse retrieval, hybrid search, and context augmentation. This enables fast, context-aware information flow between agents and underlying data sources.
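Hybrid search needs some way to merge the ranked lists returned by the dense and sparse retrievers. One common option is reciprocal rank fusion (RRF), sketched below. This is an illustration of the general technique, not a statement of which fusion method NextNeural uses; the document IDs are made up, and `k = 60` is a conventional default for RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) for every list it appears in,
    where rank starts at 1. Documents ranked highly by several
    retrievers accumulate the largest totals.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable result.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Made-up results from a dense (embedding) and a sparse (keyword) retriever.
dense_results = ["doc_a", "doc_b", "doc_c"]
sparse_results = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([dense_results, sparse_results])
print(fused)  # ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

RRF works on ranks rather than raw scores, so it does not require the dense and sparse scores to be on a comparable scale.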

Orchestration and Routing:

At runtime, select agents use agentic routing to dynamically identify the best combination of models and tools for a given task, optimizing for accuracy, latency, and cost. SQL synthesis modules allow agents to query structured enterprise data securely, turning databases into active knowledge sources.
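The accuracy, latency, and cost trade-off can be pictured with a deliberately simplified, rule-based selector. It is a stand-in for the idea only: the model names, numbers, weights, and the `pick_model` helper are all hypothetical, and real agentic routing would typically decide dynamically rather than from a static table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    accuracy: float            # 0..1, higher is better (hypothetical benchmark)
    latency_ms: float          # typical response time
    cost_per_1k_tokens: float  # in arbitrary currency units


def pick_model(
    candidates: list[ModelProfile],
    max_latency_ms: float,
    w_accuracy: float = 1.0,
    w_cost: float = 0.1,
) -> ModelProfile:
    """Pick the best-scoring model among those within the latency budget."""
    eligible = [m for m in candidates if m.latency_ms <= max_latency_ms]
    if not eligible:
        raise ValueError("no model satisfies the latency budget")
    return max(
        eligible,
        key=lambda m: w_accuracy * m.accuracy - w_cost * m.cost_per_1k_tokens,
    )


# Hypothetical profiles, for illustration only.
models = [
    ModelProfile("large-model", accuracy=0.90, latency_ms=1200, cost_per_1k_tokens=0.60),
    ModelProfile("small-model", accuracy=0.80, latency_ms=300, cost_per_1k_tokens=0.10),
]

print(pick_model(models, max_latency_ms=2000).name)  # large-model
print(pick_model(models, max_latency_ms=500).name)   # small-model
```

Treating latency as a hard budget and trading accuracy against cost within it is one design choice among many; a weighted sum over all three would also work.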

API & Service Layer:

All components communicate via FastAPI-based APIs, which provide low-latency, asynchronous request handling and modular scalability. This API-first architecture allows internal teams to programmatically orchestrate agents, monitor execution, and integrate workflows into their own business systems.
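From the client side, asynchronous APIs make it natural to run independent agents concurrently and chain dependent ones. The sketch below uses only the standard library; `call_agent` is a stub standing in for a real HTTP call, and the agent IDs and payload fields are hypothetical.

```python
import asyncio


async def call_agent(agent_id: str, payload: dict) -> dict:
    """Stub standing in for an HTTP call to an agent endpoint."""
    await asyncio.sleep(0.01)  # simulate network latency
    return {"agent": agent_id, "input": payload, "status": "ok"}


async def run_workflow(recording_url: str, account_id: str) -> dict:
    # These two steps do not depend on each other, so run them concurrently.
    analysis, enrichment = await asyncio.gather(
        call_agent("call-recording-analysis", {"recording_url": recording_url}),
        call_agent("crm-enrichment", {"account_id": account_id}),
    )
    # The email step needs both earlier results, so it runs afterwards.
    return await call_agent(
        "sales-email-generation",
        {"analysis": analysis, "crm": enrichment},
    )


result = asyncio.run(
    run_workflow("https://files.example.com/call.wav", "acct-123")
)
print(result["agent"], result["status"])
```

In a real integration the stub would be replaced by an async HTTP client call, with the workflow structure left unchanged.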

NextNeural abstracts a complex multi-model environment into a unified, API-accessible orchestration fabric — one that combines the strengths of open-source AI, modern infrastructure, and enterprise-grade governance.

How to Get Started

Enterprises can begin using NextNeural by visiting our onboarding form to request access. Once submitted, access is typically granted within 24–48 hours following a short review by our team to verify enterprise eligibility and deployment requirements.

After approval, teams can log in to the NextNeural Control Layer and explore the library of 100+ prebuilt agents. Each agent can be deployed with a single click, enabling instant setup for functions such as document processing, customer support analytics, or CRM enrichment.

Organizations can choose to start with our cloud for testing, or proceed directly to self-managed deployment on their own infrastructure — on-premises or in a private cloud. The platform’s API-first design ensures a smooth transition between environments, allowing teams to test, customize, and scale their agent networks securely and efficiently.

Get started with NextNeural today.