Latest AI Releases - June 2025 Edition

Explore May 2025’s top AI releases: AlphaEvolve’s coding agent, INTELLECT-2’s decentralized RL, Claude 4, Devstral, FLUX.1 Kontext, Jules, NLWeb, and Mistral’s Document AI.

Since deep learning took hold, AI development has accelerated so sharply that many companies now treat AI R&D as a strategic imperative for staying competitive and driving innovation. May 2025 was no exception.

We saw a range of new platform models, from Anthropic's Claude 4 to Google's Veo 3, a model that generates video with synchronized audio. In the open-source ecosystem, Mistral released the Devstral model, and Alibaba released the Qwen3 series of models. Meanwhile, Microsoft launched NLWeb, an open project for adding conversational AI interfaces to websites.

For technical leaders evaluating AI investments, May's developments offer concrete evidence that AI is moving from experimental technology to core business infrastructure. Stronger benchmark results, architectural innovations, and enterprise-grade features signal a maturing field in which AI adoption is becoming increasingly important for staying competitive.

AlphaEvolve by DeepMind: A Coding Agent for Scientific and Algorithmic Discovery

AlphaEvolve is an evolutionary coding agent developed by Google DeepMind, designed to autonomously discover and optimize algorithms across various scientific and engineering domains. By integrating large language models (LLMs), specifically Gemini Flash and Gemini Pro, with an evolutionary framework, AlphaEvolve generates, evaluates, and iteratively refines code solutions.

AlphaEvolve works by creating variations of existing algorithms, then assessing their performance using automated evaluators, and eventually selecting the most promising candidates for further evolution. The system’s architecture allows it to tackle complex problems by continuously improving upon previous iterations, leading to the development of novel and efficient algorithms.
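The generate-evaluate-select loop can be illustrated with a toy example. This is a minimal sketch under stated assumptions, not DeepMind's implementation: the "programs" are just polynomial coefficients, `evaluate` is a hypothetical automated scorer, and `propose_variation` replaces the LLM with random numeric mutation (in AlphaEvolve, Gemini models propose the code changes).

```python
import random
from typing import List, Tuple

Candidate = List[float]  # toy "program": coefficients of a polynomial


def evaluate(candidate: Candidate) -> float:
    """Hypothetical automated evaluator: higher is better.

    Scores how closely the polynomial matches f(x) = 1 - 2x + 3x^2
    on a fixed grid, returned as negative squared error.
    """
    error = 0.0
    for i in range(-10, 11):
        x = i / 10
        predicted = sum(coef * x**k for k, coef in enumerate(candidate))
        target = 1 - 2 * x + 3 * x * x
        error += (predicted - target) ** 2
    return -error


def propose_variation(parent: Candidate, rng: random.Random) -> Candidate:
    """Stand-in for the LLM: perturb one coefficient at random."""
    child = list(parent)
    index = rng.randrange(len(child))
    child[index] += rng.gauss(0.0, 0.5)
    return child


def evolve(generations: int = 300, pop_size: int = 20, seed: int = 0) -> Tuple[float, Candidate]:
    rng = random.Random(seed)
    population: List[Tuple[float, Candidate]] = [
        (evaluate(c), c) for c in ([0.0, 0.0, 0.0] for _ in range(pop_size))
    ]
    for _ in range(generations):
        # Tournament selection: the best of three random members becomes the parent.
        parent = max(rng.sample(population, 3), key=lambda pair: pair[0])[1]
        child = propose_variation(parent, rng)
        population.append((evaluate(child), child))
        # Keep only the top pop_size candidates for the next round.
        population.sort(key=lambda pair: pair[0], reverse=True)
        del population[pop_size:]
    return population[0]


best_score, best_program = evolve()
print(best_score, best_program)
```

Swapping `propose_variation` for an LLM call that edits source code, and `evaluate` for a test harness, gives the general shape of the loop; AlphaEvolve adds considerably more machinery on top.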

AlphaEvolve found a procedure for multiplying 4×4 complex-valued matrices with 48 scalar multiplications, improving on the 49 required when Strassen's 56-year-old algorithm is applied recursively. It also raised the lower bound of the kissing number problem in 11 dimensions from 592 to 593, a longstanding question in geometry. Beyond mathematics, the system has optimized Google's data center scheduling, chip design, and AI training processes, including the training efficiency of the very LLMs that power AlphaEvolve itself.
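For context on the matrix multiplication result: multiplying two 4×4 matrices naively takes 64 scalar multiplications, and applying Strassen's 2×2 scheme (7 block products instead of 8) recursively brings that to 7 × 7 = 49. DeepMind reports that AlphaEvolve found a scheme using 48 multiplications for 4×4 complex-valued matrices. The sketch below implements only the Strassen baseline and counts its multiplications; it does not reproduce AlphaEvolve's algorithm.

```python
from typing import List, Tuple

Matrix = List[List[int]]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a: Matrix, b: Matrix) -> Matrix:
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split(m: Matrix) -> Tuple[Matrix, Matrix, Matrix, Matrix]:
    """Split a square matrix into four equal quadrants."""
    h = len(m) // 2
    return ([r[:h] for r in m[:h]], [r[h:] for r in m[:h]],
            [r[:h] for r in m[h:]], [r[h:] for r in m[h:]])


def strassen(a: Matrix, b: Matrix, counter: List[int]) -> Matrix:
    """Multiply square matrices whose size is a power of two.

    counter[0] accumulates the number of scalar multiplications performed.
    """
    if len(a) == 1:
        counter[0] += 1
        return [[a[0][0] * b[0][0]]]
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Strassen's seven block products (instead of the naive eight).
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    return [l + r for l, r in zip(c11, c12)] + [l + r for l, r in zip(c21, c22)]


def naive(a: Matrix, b: Matrix) -> Matrix:
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


A = [[i * 4 + j for j in range(4)] for i in range(4)]
B = [[(i + 2 * j) % 5 - 2 for j in range(4)] for i in range(4)]
count = [0]
C = strassen(A, B, count)
print(count[0])  # 49 scalar multiplications for 4x4
```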

If you are building evolutionary systems, the AlphaEvolve paper is an essential read.

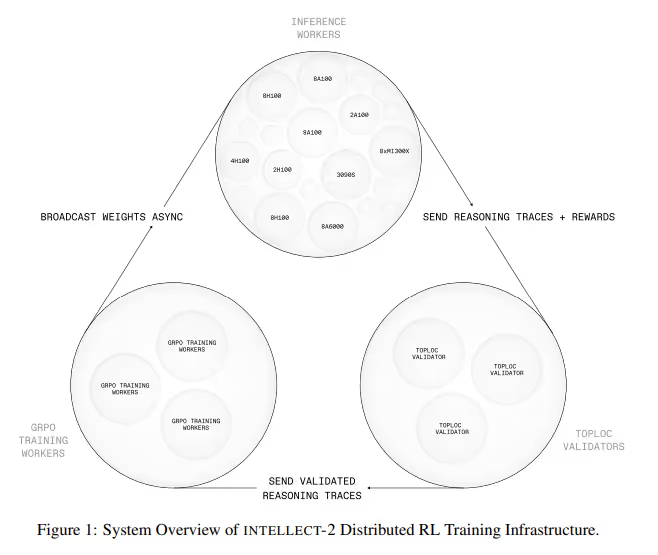

INTELLECT-2: Decentralized Reinforcement Learning at Scale

Compute requirements are one of the biggest obstacles to training large AI models. Prime Intellect's INTELLECT-2 project addresses this by moving away from traditional centralized training.

Rather than relying on a fixed cluster of high-performance servers, INTELLECT-2, a 32-billion-parameter model, was trained with reinforcement learning across a permissionless, globally distributed network of compute contributors.

This training setup targets one of the core bottlenecks of centralized AI training: scalability limits imposed by compute, memory, and infrastructure. Because training can proceed asynchronously across heterogeneous and even unreliable devices, the approach could open large-scale model training to contributors well beyond a single data center.
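Training on unreliable, asynchronous workers means some rollouts arrive generated by slightly outdated policy weights. One common way to handle this, sketched here under hypothetical assumptions (the dropout and sync probabilities and a fixed `max_staleness` cutoff are all made up), is to tag each rollout with the policy version that produced it and discard rollouts that are too old. This is a toy simulation, not Prime Intellect's training code.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Rollout:
    worker_id: int
    policy_version: int  # version of the weights that generated this rollout
    reward: float        # placeholder reward from a hypothetical verifier


def run_async_training(steps: int = 50, num_workers: int = 8,
                       max_staleness: int = 2, seed: int = 0) -> List[Tuple[Rollout, int]]:
    """Return accepted rollouts paired with how many versions stale they were."""
    rng = random.Random(seed)
    trainer_version = 0
    worker_versions = [0] * num_workers
    used: List[Tuple[Rollout, int]] = []
    for _ in range(steps):
        batch: List[Rollout] = []
        for worker in range(num_workers):
            if rng.random() < 0.2:      # hypothetical: worker is offline this round
                continue
            if rng.random() < 0.5:      # hypothetical: worker pulls the newest weights
                worker_versions[worker] = trainer_version
            batch.append(Rollout(worker, worker_versions[worker], rng.random()))
        # Keep rollouts whose policy is at most max_staleness versions behind.
        fresh = [(r, trainer_version - r.policy_version) for r in batch
                 if trainer_version - r.policy_version <= max_staleness]
        if fresh:
            used.extend(fresh)
            trainer_version += 1        # stand-in for one policy-gradient update
    return used


accepted = run_async_training()
```

A larger `max_staleness` keeps slow contributors useful at the cost of training on more off-policy data; this is the kind of trade-off a decentralized RL system has to make.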

Could decentralized RL ease the compute shortage that GPU-poor regions face?

Claude 4 by Anthropic: Coding, Advanced Reasoning, and AI Agents

Anthropic has released its latest models, Claude Opus 4 and Claude Sonnet 4. Claude Opus 4 is Anthropic's most capable model to date, built for complex coding and long-running problem-solving. It scored 72.5% on SWE-bench and 43.2% on Terminal-bench, and it can sustain multi-step tasks over several hours, which makes it well suited to developers and researchers working on intricate projects that require continuous focus and advanced reasoning. Claude Sonnet 4 is the more cost-effective option, yet it improves substantially on its predecessor, Claude Sonnet 3.7, with a state-of-the-art 72.7% on SWE-bench. Both models support extended thinking with tool use, parallel tool execution, and improved memory: when developers grant access to local files, the models can extract and retain key information over time.
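Parallel tool execution means a single model turn can request several tools at once. A minimal client-side sketch, assuming the Messages API's content-block format (`tool_use` blocks in the assistant turn, answered by `tool_result` blocks in the next user turn), runs all requested tools concurrently with `asyncio`. The tools, their names, and the tool-use IDs are hypothetical, and the API call itself is omitted so the example runs offline.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable, Dict, List


# Hypothetical local tools; names and behavior are illustrative only.
async def get_weather(city: str) -> Dict[str, Any]:
    await asyncio.sleep(0.1)  # simulate network latency
    return {"city": city, "forecast": "sunny"}


async def get_local_time(timezone: str) -> Dict[str, Any]:
    await asyncio.sleep(0.1)
    return {"timezone": timezone, "time": "12:00"}


TOOLS: Dict[str, Callable[..., Awaitable[Dict[str, Any]]]] = {
    "get_weather": get_weather,
    "get_local_time": get_local_time,
}


async def execute_tool_uses(content: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Run every tool_use block from one assistant turn concurrently."""
    tool_uses = [block for block in content if block["type"] == "tool_use"]

    async def run(block: Dict[str, Any]) -> Dict[str, Any]:
        output = await TOOLS[block["name"]](**block["input"])
        return {"type": "tool_result", "tool_use_id": block["id"],
                "content": json.dumps(output)}

    # gather() runs the calls concurrently and returns results in request order.
    return list(await asyncio.gather(*(run(b) for b in tool_uses)))


assistant_content = [
    {"type": "text", "text": "Let me check both."},
    {"type": "tool_use", "id": "toolu_01", "name": "get_weather",
     "input": {"city": "Paris"}},
    {"type": "tool_use", "id": "toolu_02", "name": "get_local_time",
     "input": {"timezone": "Europe/Paris"}},
]
results = asyncio.run(execute_tool_uses(assistant_content))
next_user_message = {"role": "user", "content": results}
```

`next_user_message` would then be appended to the conversation and sent back to the model in the following request.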

Alongside the models, the Anthropic API gained four new capabilities aimed at agent builders: a code execution tool, an MCP connector, a Files API, and prompt caching for up to one hour. Both Claude Opus 4 and Sonnet 4 are hybrid models with two modes, near-instant responses and extended thinking for deeper reasoning, and both are available through the Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI. Pricing is unchanged from the previous models: Opus 4 costs $15/$75 per million tokens (input/output) and Sonnet 4 costs $3/$15.
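The quoted prices make it easy to estimate the list-price cost of a workload. A small helper, assuming only the per-million-token rates above (no prompt caching, batch discounts, or other pricing adjustments); the dictionary keys are informal labels, not API model identifiers:

```python
# Per-million-token list prices (input, output) in USD, as quoted above.
# Keys are informal labels, not API model identifiers.
PRICES_PER_MILLION = {
    "opus-4": (15.00, 75.00),
    "sonnet-4": (3.00, 15.00),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the list-price cost in USD, ignoring caching and other discounts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Example: an agent run that reads 200k tokens and writes 50k tokens.
for model in PRICES_PER_MILLION:
    print(model, round(estimate_cost(model, 200_000, 50_000), 2))
```

For that run, this prints 6.75 for Opus 4 and 1.35 for Sonnet 4, a 5× difference at identical token counts.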

Devstral by Mistral: An Agentic LLM for Software Engineering Tasks

Mistral AI, in collaboration with All Hands AI, has released Devstral, an open-source agentic large language model (LLM) tailored to complex software engineering tasks. Whereas traditional LLMs excel at isolated code completions, Devstral is designed to navigate entire codebases, make iterative edits across multiple files, and resolve real-world GitHub issues. It does this by running inside code agent frameworks such as OpenHands and SWE-Agent, which give it the context needed for intricate development work. Devstral scored 46.8% on the SWE-bench Verified benchmark, outperforming larger models such as DeepSeek-V3-0324 and Qwen3 235B-A22B and surpassing OpenAI's GPT-4.1-mini by more than 20 percentage points.

Built on Mistral Small 3.1, Devstral is a 24-billion-parameter model with a 128,000-token context window, which lets it process extensive codebases. It is light enough to run locally on a single NVIDIA RTX 4090 GPU or a Mac with 32GB of RAM, making it accessible to developers and organizations with stringent privacy requirements. Released under the permissive Apache 2.0 license, Devstral is available for download from Hugging Face, Ollama, and Kaggle, and can also be accessed through Mistral's API as devstral-small-2505. Mistral AI describes Devstral as a research preview and is working on a more capable version to further advance autonomous software development.
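Back-of-the-envelope arithmetic shows why a 24-billion-parameter model can run on a single RTX 4090 with 24 GiB of VRAM: the weights only fit once they are quantized. This sketch counts weight memory alone and ignores the KV cache and activations, which grow with context length, so real requirements are higher; the assumption that local deployments use 8-bit or 4-bit weights is ours, not a statement from Mistral.

```python
def weight_memory_gib(num_params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in GiB (ignores KV cache and activations)."""
    return num_params * bits_per_param / 8 / 2**30


DEVSTRAL_PARAMS = 24e9      # 24 billion parameters, as stated above
RTX_4090_VRAM_GIB = 24.0    # the card's 24 GiB of VRAM

for bits in (16, 8, 4):
    gib = weight_memory_gib(DEVSTRAL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {gib:5.1f} GiB, fits on one RTX 4090: {gib < RTX_4090_VRAM_GIB}")
```

At 16 bits the weights alone need roughly 45 GiB; at 4 bits they need about 11 GiB, leaving headroom on the card for the KV cache.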

FLUX.1 Kontext by Black Forest Labs: In-context Image Generation

Black Forest Labs has introduced FLUX.1 Kontext, a suite of generative flow matching models for in-context image generation and editing. Unlike traditional text-to-image models, FLUX.1 Kontext lets users prompt with both text and images, so visual concepts can be extracted and modified to produce coherent renderings. Key features include character consistency, local editing, style reference, and interactive speed, allowing iterative, step-by-step refinement while preserving image quality and character identity.

The FLUX.1 Kontext suite comprises three models: FLUX.1 Kontext [pro], FLUX.1 Kontext [max], and FLUX.1 Kontext [dev]. The [pro] model delivers fast, iterative image editing, handling both text and reference images as inputs for targeted edits and complex scene transformations. The [max] model offers maximum performance with improved prompt adherence and typography generation, maintaining high consistency without compromising speed. The [dev] model, currently in private beta, is a lightweight 12B diffusion transformer suitable for customization and compatible with previous FLUX.1 [dev] inference code.
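Flow matching generates samples by integrating a learned velocity field that carries random noise to data. The toy below shows the mechanism in one dimension under loudly simplified assumptions: the "data" is a Gaussian N(mu, sigma^2), and instead of a trained network we use the exact velocity field for the straight-line path between noise and data. It says nothing about how Kontext conditions on text and reference images, and conventions for which end of the path is noise vary between papers.

```python
import random
import statistics
from typing import List


def velocity(x: float, t: float, mu: float, sigma: float) -> float:
    """Exact marginal velocity E[x1 - x0 | x_t = x] for the path
    x_t = (1 - t) * x0 + t * x1, with x0 ~ N(0, 1) and x1 ~ N(mu, sigma^2).

    A real flow matching model learns this field with a neural network.
    """
    a, b = 1.0 - t, t
    var_t = a * a + b * b * sigma * sigma    # Var[x_t]
    cov_t = b * sigma * sigma - a            # Cov[x1 - x0, x_t]
    return mu + cov_t / var_t * (x - b * mu)


def sample(n: int, mu: float, sigma: float, steps: int = 200, seed: int = 0) -> List[float]:
    """Draw noise at t = 0 and integrate dx/dt = v(x, t) to t = 1 with Euler steps."""
    rng = random.Random(seed)
    dt = 1.0 / steps
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    for k in range(steps):
        t = k * dt
        xs = [x + dt * velocity(x, t, mu, sigma) for x in xs]
    return xs


samples = sample(5000, mu=3.0, sigma=0.5)
print(round(statistics.mean(samples), 2), round(statistics.stdev(samples), 2))
```

In a trained model, the network regresses the per-sample target x1 - x0 at random points along the path, and its prediction replaces `velocity` at sampling time.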

Jules by Google: Asynchronous AI Coding Assistant

Google has launched Jules, an asynchronous AI coding assistant now available in public beta. Unlike traditional code-completion tools, Jules operates as an autonomous agent that clones your GitHub repository into a secure Google Cloud virtual machine, comprehends the full context of your project, and executes tasks such as writing tests, fixing bugs, updating dependencies, and building new features. Powered by Gemini 2.5 Pro, Jules leverages advanced reasoning capabilities to handle complex, multi-file changes and concurrent tasks with speed and precision. Its asynchronous nature allows developers to focus on other tasks while Jules works in the background, presenting its plan, reasoning, and a diff of the changes upon completion.

Jules integrates seamlessly into existing GitHub workflows, enabling developers to prompt tasks, review and modify Jules' proposed plans, and manage all tasks from a dedicated panel. Notably, Jules offers audio changelogs, transforming recent commits into contextual summaries that developers can listen to, enhancing codebase comprehension. During the public beta phase, access to Jules is free of charge, though usage limits apply, with plans to introduce pricing as the platform matures.

NLWeb by Microsoft: Natural Language Interface for Websites

Microsoft has unveiled NLWeb, an open-source initiative aimed at simplifying the integration of natural language interfaces into websites, enabling users to interact with site content conversationally. By leveraging existing web standards like Schema.org and RSS, NLWeb allows developers to create AI-powered experiences that process user queries through language models, perform semantic searches, and generate natural responses. Each NLWeb instance functions as a Model Context Protocol (MCP) server, making website content discoverable and accessible to AI agents and other participants in the MCP ecosystem. This approach empowers web publishers to transform their sites into intelligent, interactive platforms without relying on centralized AI services.

The project, spearheaded by R.V. Guha (known for his work on RSS and Schema.org), has already seen adoption by organizations such as TripAdvisor, Shopify, and Eventbrite. NLWeb is platform-agnostic: it supports all major operating systems and lets developers choose the components that best suit their needs, including a range of models and vector databases.
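The core idea, answering a natural-language query from a site's structured Schema.org data, can be sketched in a few lines. This is a hypothetical illustration, not NLWeb's API: the items are made up, and a bag-of-words cosine similarity stands in for the embedding model and vector database a real deployment would use. A language model would then turn the retrieved items into a conversational answer.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical Schema.org items, as a publisher might expose them via JSON-LD.
ITEMS: List[Dict[str, str]] = [
    {"@type": "Recipe", "name": "Vegan Chili",
     "description": "A spicy bean chili with no meat"},
    {"@type": "Recipe", "name": "Chicken Curry",
     "description": "Creamy chicken curry served with rice"},
    {"@type": "Event", "name": "Jazz Night",
     "description": "Live jazz music downtown on Friday"},
]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, items: List[Dict[str, str]], top_k: int = 2) -> List[Dict[str, str]]:
    """Rank items by similarity to the query and return those with any overlap."""
    q = vectorize(query)
    scored = [(cosine(q, vectorize(item["name"] + " " + item["description"])), item)
              for item in items]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for score, item in scored[:top_k] if score > 0]


results = search("spicy vegetarian chili", ITEMS)
```

Because the results are still Schema.org objects, the same retrieval step could back both a chat interface and a tool exposed to other agents.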

Mistral's Document AI: Enterprise-Grade Document Processing

Mistral's Document AI offers enterprise-grade document processing by combining Optical Character Recognition (OCR) with structured data extraction. It extracts and interprets complex text, handwriting, tables, and images from many document types, and Mistral reports over 99% accuracy across global languages. With throughput of up to 2,000 pages per minute on a single GPU, it is aimed at large-scale, cost-sensitive document operations. Integration with Mistral's broader AI tooling supports workflows that span the full document lifecycle.
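The throughput figure translates directly into capacity planning. A sketch assuming the peak rate of 2,000 pages per minute is sustained and, for the multi-GPU case, that throughput scales linearly; both are assumptions, and since the figure is "up to", real workloads may take longer:

```python
def processing_hours(pages: int, pages_per_minute: float = 2000.0, gpus: int = 1) -> float:
    """Best-case wall-clock hours at the peak rate, assuming linear scaling across GPUs."""
    if pages_per_minute <= 0 or gpus <= 0:
        raise ValueError("rate and GPU count must be positive")
    return pages / (pages_per_minute * gpus) / 60


print(round(processing_hours(1_000_000), 2))          # one GPU: 8.33 hours
print(round(processing_hours(1_000_000, gpus=4), 2))  # four GPUs, if scaling were linear: 2.08 hours
```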

A key component of Mistral's Document AI is its OCR model, which excels in understanding complex document elements, including interleaved imagery, mathematical expressions, tables, and advanced layouts such as LaTeX formatting. The model's multilingual capabilities enable it to parse, understand, and transcribe thousands of scripts, fonts, and languages, making it versatile for global organizations handling diverse linguistic documents. Additionally, Mistral OCR introduces the use of documents as prompts, allowing users to extract specific information and format it in structured outputs like JSON, facilitating integration into downstream processes. For organizations with stringent data privacy requirements, Mistral offers a self-hosting option, ensuring sensitive information remains secure within their infrastructure.
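When OCR output is requested as JSON, downstream code should still validate it before use. A minimal sketch using only the standard library; the invoice schema, field names, and sample JSON are hypothetical stand-ins for whatever structure you ask the model to return, and no Mistral API is called:

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class LineItem:
    description: str
    amount: float


@dataclass
class Invoice:
    invoice_number: str
    total: float
    items: List[LineItem]


def parse_invoice(raw: str) -> Invoice:
    """Turn extracted JSON into typed records, rejecting inconsistent data."""
    data = json.loads(raw)
    items = [LineItem(str(i["description"]), float(i["amount"])) for i in data["items"]]
    invoice = Invoice(str(data["invoice_number"]), float(data["total"]), items)
    # Cross-check extracted values before they reach downstream systems.
    if abs(sum(i.amount for i in items) - invoice.total) > 0.01:
        raise ValueError(f"line items do not add up to the total for {invoice.invoice_number}")
    return invoice


# Hypothetical extraction result standing in for model output.
extracted = (
    '{"invoice_number": "INV-001", "total": 150.0, "items": ['
    '{"description": "Consulting", "amount": 100.0}, '
    '{"description": "Support", "amount": 50.0}]}'
)
invoice = parse_invoice(extracted)
```

Checks like the sum test catch extraction errors that are syntactically valid JSON but semantically wrong.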