Newsletter Issue June 2025: Can LRMs Think?

June 13, 2025 Issue: Can AI truly reason? Apple’s new study sparks debate. This issue covers our AI intern opening, agentic AI guides, and the latest releases: Claude 4, DGM, FLUX.1 Kontext, and more.

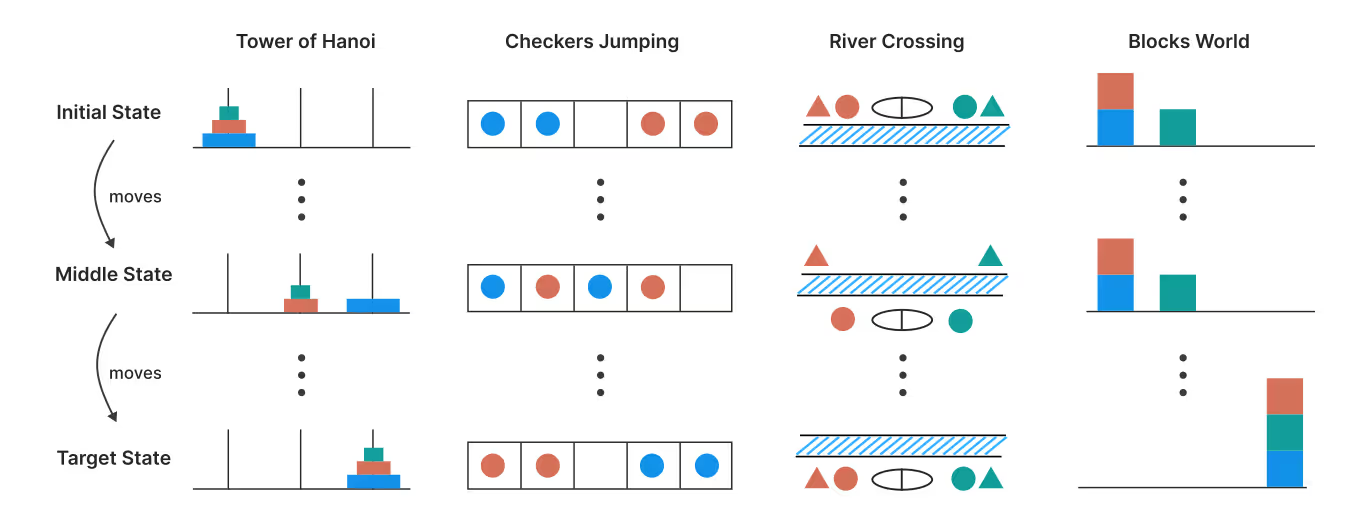

Apple researchers recently published a paper, ‘The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity’, that has sent ripples across the AI community. The researchers designed controlled puzzle environments, such as Tower of Hanoi and River Crossing, whose difficulty can be dialed up precisely (for example, by adding more disks), to test whether Large Reasoning Models (LRMs) truly ‘think’.

They found a striking “complete accuracy collapse” once puzzles pass a certain level of complexity. Even when the researchers supplied the correct solution algorithm in the prompt, so that the models only had to execute it, performance did not improve and the collapse occurred at roughly the same point. In other words, what appears as reasoning may often be clever pattern-matching stretched over multiple inference steps, rather than genuine logical processing.

The paper also pushes us to question the role of ‘thinking tokens’, the extended chain of reasoning a model generates before producing its final answer. The researchers identified three regimes. On low-complexity puzzles, standard LLMs often matched or even outperformed LRMs; on medium-complexity puzzles, the extra thinking gave LRMs a clear edge; and beyond a certain threshold, both kinds of model collapsed completely. Strikingly, as problems approach that threshold, LRMs begin thinning out their reasoning traces, effectively “giving up” even though they still have ample token budget left.

Significance of Apple’s findings

Apple’s research is a fascinating read. However, rather than expecting models to “think” like humans, isn’t it more productive to leverage their ability to generate the functions, code, and algorithms that solve these problems? We believe a model’s true strength lies not in replacing deep human reasoning, but in serving as a tool that automates the construction of repeatable solutions. In this regard, LRMs are powerful.

Most real-world challenges, like extracting structured data from messy documents, automating customer service flows, or generating simple analytics scripts, don’t require multi-step reasoning on the level of a math olympiad. What matters far more is the ability to describe a business problem and have the AI produce an executable workflow, API call, or small piece of code that reliably delivers value.

Recent developments in tool calling illustrate this shift, as do protocols such as Anthropic’s Model Context Protocol (MCP), which connects models to external tools and data, and Google’s Agent2Agent (A2A) protocol, which lets agents coordinate with one another. Instead of letting the model hallucinate solutions, you guide it to assemble functions, compose calls, and build reusable logic. Even simple, “shallow” reasoning can go a long way, as long as the model can reliably create, invoke, and orchestrate functions to deliver results.
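To make this concrete, here is a minimal Python sketch of the application side of tool calling: the model proposes a call as JSON, and the application checks it against a registry of allowed functions before running it. The tool names, the order data, and the JSON shape (a `name` plus an `arguments` object) are hypothetical; real model APIs and protocols such as MCP define their own message formats.

```python
import json
from typing import Any, Callable


# Hypothetical tools an application might expose to a model.
def get_order_status(order_id: str) -> str:
    # Stand-in for a real database or API lookup.
    orders = {"1001": "shipped", "1002": "processing"}
    return orders.get(order_id, "not found")


def refund_order(order_id: str, amount: float) -> str:
    # Stand-in for a real payment-system call.
    return f"refund of {amount:.2f} queued for order {order_id}"


# Registry of functions the model is allowed to call, keyed by name.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_order_status": get_order_status,
    "refund_order": refund_order,
}


def dispatch(tool_call_json: str) -> Any:
    """Parse a model-proposed tool call and run it only if the tool is registered."""
    call = json.loads(tool_call_json)
    name = call.get("name")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


# In a real system, this string would come from the model's response.
model_output = '{"name": "get_order_status", "arguments": {"order_id": "1001"}}'
print(dispatch(model_output))  # shipped
```

The design point is that the model only chooses which function to call and with what arguments; the logic itself lives in ordinary, testable code, and anything outside the registry is rejected.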

Reasoning in the Real World

The question then arises: if an AI model can create an algorithm that goes on to solve a problem (such as the Tower of Hanoi), should it be considered a reasoning model? Probably not, as the outcome still comes from the model’s training: it assembles code that has led to success in similar contexts in the past. An LLM may generate code for the Tower of Hanoi because it has seen many examples, but it does not truly “grasp” the mathematical principles or the conceptual challenge the way a human learner does. It is therefore mimicking reasoning rather than originating it.
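As an illustration of the kind of code in question, here is a minimal Python sketch of the textbook recursive Tower of Hanoi solution, along with a small checker that replays the moves and rejects any that place a larger disk on a smaller one. The function names and peg labels are our own, not taken from Apple’s paper. An n-disk puzzle needs 2^n - 1 moves, so 10 disks already means 1,023 moves: writing out every move in a reasoning trace is a very different task from writing the dozen lines that generate them.

```python
def hanoi(n, source="A", target="C", spare="B", moves=None):
    """Return the list of (from_peg, to_peg) moves that solves an n-disk Tower of Hanoi."""
    if moves is None:
        moves = []
    if n == 0:
        return moves
    # Move the top n-1 disks out of the way, onto the spare peg.
    hanoi(n - 1, source, spare, target, moves)
    # Move the largest remaining disk to the target peg.
    moves.append((source, target))
    # Move the n-1 disks from the spare peg back on top of it.
    hanoi(n - 1, spare, target, source, moves)
    return moves


def is_valid_solution(n, moves, source="A", target="C", spare="B"):
    """Replay moves and check that no larger disk ever lands on a smaller one."""
    # Each peg is a stack; disks are numbered 1 (smallest) to n (largest).
    pegs = {source: list(range(n, 0, -1)), spare: [], target: []}
    for frm, to in moves:
        if not pegs[frm]:
            return False  # tried to move from an empty peg
        disk = pegs[frm][-1]
        if pegs[to] and pegs[to][-1] < disk:
            return False  # larger disk placed on a smaller one
        pegs[to].append(pegs[frm].pop())
    # Solved only if every disk ends up on the target peg, largest at the bottom.
    return pegs[target] == list(range(n, 0, -1))


moves = hanoi(3)
print(len(moves), is_valid_solution(3, moves))  # 7 True
```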

Yet, as we are seeing with code-trained models, the mimicry can be extremely powerful in practice. Cursor has soared to a $9 billion valuation within just three years of launch, with over $500 million in annual recurring revenue, and generates almost a billion lines of code daily. For well-defined, repetitive, and pattern-rich domains like software development, this “mimicked reasoning” is often more than sufficient to deliver substantial value. It allows engineers to move faster, focus on higher-level problem-solving, and delegate much of the repetitive scaffolding to the machine.

Humans vs AI

Apple’s research underscores a crucial distinction, one we already knew: while humans excel at abstract reasoning, intuition, and insight, especially when confronted with novel, high-complexity tasks, current LRMs are, at best, masters of imitation and composition rather than genuine thinkers. Where humans intuitively break down new problems and adapt, LRMs are bounded by the contours of their training and tend to falter when a task strays outside the patterns they’ve learned to replicate.

Some of the most influential thinkers in science and philosophy anticipated this limitation well before LRMs existed. Roger Penrose, for example, has long argued that human cognition is fundamentally non-algorithmic: he posits that consciousness and deep understanding arise from quantum processes in the brain, and are thus beyond the reach of any Turing machine.

Yet, the AI community continues to push forward on its ambition to achieve AGI, where a machine would finally be able to reason like we do. This instinct, the idea that we will eventually create a machine in our own image, is nothing new. We can trace this impulse back to stories like Pygmalion in Greek mythology, the Jewish legend of the Golem, and Mary Shelley’s Frankenstein, each reflecting a deep-rooted urge to endow our creations with life and intelligence. The development of AI today, therefore, can be seen as a continuation of this age-old quest, a search for mastery over our own nature. As historian Yuval Noah Harari writes, the desire to “upgrade” and “recreate” ourselves through technology is as much about self-understanding and transcendence as it is about utility, revealing both our creativity and our existential longing for connection and meaning.

All in all, it is a great time to be alive and witness the birth of inorganic intelligence: mimicry though it may be, it holds up a mirror that forces us to rethink the very nature of thought, creativity, and what it means to be human.

Current Openings at Superteams.ai

AI Developer Intern

We're looking for motivated young developers eager to learn through hands-on work on projects. Apply if you have experience with: Python, Django, FastAPI, PostgreSQL, AWS, React.js, or Vue.js.

In-Depth Guides

How Can WooCommerce Stores Use Agentic AI to Improve Customer Experience?

This guide explains how WooCommerce store owners can use agentic AI to transform customer experience through intelligent, autonomous agents.

Latest AI Releases - June 2025 Edition

Explore May 2025’s top AI releases: AlphaEvolve’s coding agent, INTELLECT-2’s decentralized RL, Claude 4, Devstral, FLUX.1 Kontext, Jules, NLWeb, and Mistral’s Document AI.

Agentic AI Guardrails: What to Know Before You Let AI Take the Wheel

This guide explains how to design oversight, alignment, and trust into agentic AI from day one.

What’s New in AI

Magistral: Mistral’s First AI Reasoning Model

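Magistral is Mistral’s first reasoning model, released in two versions: Magistral Small (24B parameters, open-source) and Magistral Medium (enterprise). Both deliver transparent, multilingual, step-by-step reasoning, and Mistral says its Flash Answers mode in Le Chat responds up to 10× faster than most competitors. Read the full paper.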

OpenAI Launches o3-pro, Slashes o3 Costs 80%

OpenAI drops o3-pro, a deeper-thinking reasoning model that, according to OpenAI’s own evaluations, beats rivals on PhD-level math and science benchmarks, while slashing o3 pricing by 80%. Pro and Team users get immediate access; Enterprise users follow next week.

Sakana AI’s Darwin Gödel Machine

Sakana AI has unveiled the Darwin Gödel Machine (DGM), an AI system that autonomously rewrites its own code to enhance its performance. It improved its score from 20% to 50% on SWE-bench and from 14.2% to 30.7% on Polyglot.

ElevenLabs Conversational AI 2.0

ElevenLabs launches Conversational AI 2.0, enabling fluid, real-time voice interactions with turn-taking, RAG integration, and instant language switching, bringing human-like, multilingual conversations to AI-driven customer experiences.

Black Forest Labs’ FLUX.1 Kontext

Black Forest Labs has introduced FLUX.1 Kontext, a suite of generative flow-matching models that enable in-context image generation and editing. Key features include character consistency, local editing, style reference, and interactive speed, allowing for iterative, step-by-step refinements while preserving image quality and character identity.

About Superteams.ai

Superteams.ai acts as your extended R&D unit and AI team. We work with you to pinpoint high-impact use cases, rapidly prototype and deploy bespoke AI solutions, and upskill your in-house team so that they can own and scale the technology.

Book a Strategy Call or Contact Us to get started.