Building Accurate AI Assistants for the Banking Sector

Here, we dive into the technologies and business imperatives behind building highly accurate AI assistants for the banking sector.

Executive Summary

Accuracy in AI assistants is now a non-negotiable for banks—impacting customer trust, compliance, and operational risk. This article guides CXOs through building accurate, bank-grade AI copilots, covering essential strategies, data foundations, deployment best practices, and future trends, to help banks lead in secure, reliable, and innovative digital services.

Introduction

In banking, we’re witnessing firsthand how artificial intelligence is reshaping the industry. What began as simple, scripted chatbots has quickly evolved into powerful AI copilots that now assist teams and serve customers across every touchpoint. With this evolution, we face both tremendous opportunity and heightened risk. Today’s AI assistants are trusted with sensitive transactions, regulatory compliance, fraud detection, and complex customer needs—leaving little room for error.

The stakes couldn’t be higher. An inaccurate response from your AI, whether it’s an incorrect payment, a missed regulatory update, or a misclassified risk, can mean significant financial loss, compliance breaches, or a blow to your institution’s hard-earned reputation.

That’s why building truly accurate and trustworthy AI assistants is a top priority. In this article, we’ll walk you through the essential strategies and technologies you need to deliver AI that’s not just intelligent, but reliably accurate and aligned with your business, regulatory, and customer commitments.

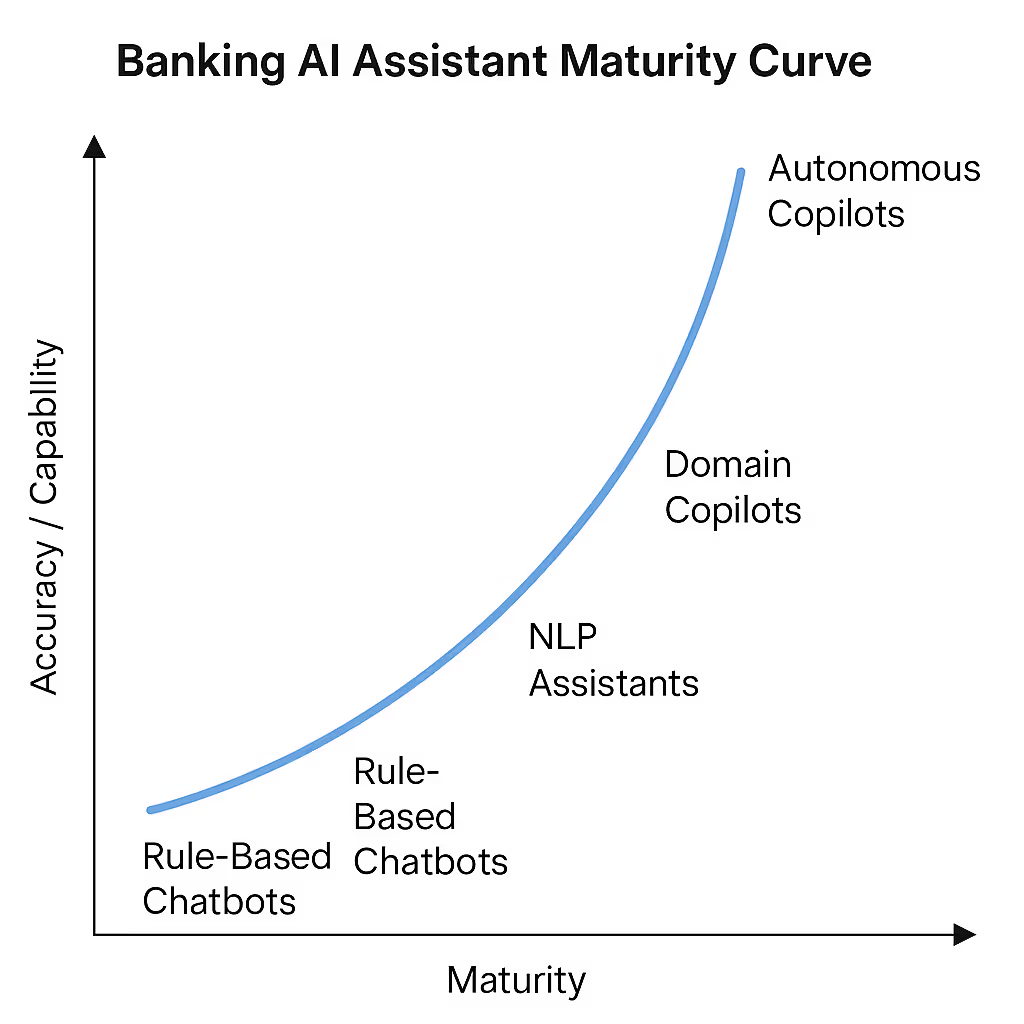

From Chatbots to Autonomous Copilots: The Maturity Curve in Banking AI

In the early days, the banking sector relied on basic, scripted chatbots—systems that could handle only the simplest FAQs, with no real understanding of intent or context. Their utility was limited, but so were the risks associated with their errors. Over time, more advanced AI assistants that leverage natural language processing, machine learning, and real-time data integration have become mainstream.

Today, we find ourselves at a turning point. AI copilots in banking can perform a wide range of tasks, from handling customer queries about loan eligibility to supporting employees with regulatory research or even proactively identifying potential fraud. As these assistants have become more capable and embedded in our operations, expectations around accuracy have escalated. What was once “good enough” in a simple chatbot is no longer sufficient when AI copilots are entrusted with high-value transactions or compliance-sensitive decisions.

Consider the following maturity curve:

- Rule-Based Chatbots: Handle scripted queries; their errors have low impact.

- NLP-Powered Assistants: Can understand broader intent, but require careful tuning to avoid misinterpretation.

- Domain-Specific Copilots: Integrate with internal systems, offer personalized guidance, and must be grounded in accurate, current data.

- Autonomous Copilots: Operate proactively, make recommendations or decisions, and demand the highest levels of accuracy and explainability.

We have already seen how moving up this curve amplifies both the bank’s capabilities and responsibilities. For example, one major bank’s transition from a basic FAQ bot to a domain-specific AI copilot resulted in a dramatic reduction in call center load—but only after significant investments in accuracy, grounding, and continuous improvement. Early pilot runs exposed how even small errors—such as outdated product information—could quickly escalate into customer dissatisfaction or operational risk.

As we advance, we must recognize that accuracy is not a fixed destination. It is a moving target that becomes ever more critical as our assistants become more sophisticated and central to the business.

Insight #1:

Accuracy is not a static target. As assistants become more capable, their margin for error decreases—especially when handling high-value or regulated interactions.

Why Accuracy Matters: A Case Study from India’s Microfinance Sector

For India’s microfinance banks, accuracy in AI-powered assistants isn’t just a matter of convenience; it’s a matter of mission integrity and survival. These banks serve millions of customers, often in rural or semi-urban regions, many of whom are engaging with formal banking for the first time. Here, even a small error can undermine hard-won trust, disrupt livelihoods, and draw the attention of regulators.

Consider a scenario where an AI assistant provides a client with incorrect information about loan repayment dates or eligibility criteria for government schemes. For a micro-entrepreneur or farmer, this could mean missing a critical payment window, losing access to vital credit, or misunderstanding the terms of their loan. In these contexts, accuracy directly impacts financial inclusion and customer well-being.

Operational risk, too, is amplified in microfinance. If an AI system fails to flag unusual activity—such as potential identity fraud or repayment anomalies—it can expose the bank to significant financial losses and increase the risk of default. Moreover, regulatory bodies like the RBI hold banks to stringent standards when it comes to responsible lending, KYC (Know Your Customer), and grievance redressal. An inaccurate AI that mishandles these processes can result in not just regulatory penalties, but reputational fallout in communities where word of mouth is everything.

Investing in AI accuracy brings measurable returns: fewer complaints escalated to branch staff, more efficient disbursal and collection processes, and a tangible reduction in compliance and audit costs.

Anatomy of an Accurate Banking AI Assistant

When we talk about building accurate AI assistants for banking, we’re really describing the orchestration of several tightly integrated components. It’s not enough to have a large language model (LLM) that’s impressive in isolation; true accuracy demands careful layering of data sources, retrieval strategies, security, and auditability.

At the heart of any banking AI assistant sits a domain-adapted LLM, which forms the conversational backbone. However, relying on the LLM’s “memory” alone can lead to outdated, generic, or even hallucinated responses. That’s why we layer on retrieval mechanisms that connect the assistant to live banking data, updated policy documents, customer records, transaction histories, and regulatory guidelines. Retrieval-Augmented Generation (RAG) architectures have become the standard approach here, because they let us ground each response in current, contextually relevant information retrieved at query time.
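To make the grounding step concrete, here is a minimal Python sketch of retrieve-then-generate. It assumes a toy keyword-overlap retriever in place of the embedding search a production RAG system would normally use; the `Document`, `retrieve`, and `build_grounded_prompt` names are hypothetical, and the model call itself is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Document:
    doc_id: str
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Rank documents by token overlap with the query; keep the top k with any overlap."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in corpus]
    scored = [(s, d) for s, d in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_grounded_prompt(query: str, sources: list[Document]) -> str:
    """Assemble a prompt that restricts the model to the retrieved sources."""
    if not sources:
        return ""  # nothing to ground on: the caller should escalate instead
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in sources)
    return (
        "Answer using only the sources below and cite source IDs in brackets. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
```

For a question such as “How do I request a loan tenure change?”, only the policy snippets that share terms with the query are passed to the model. An empty retrieval result produces no prompt at all, which signals that the assistant should hand over to a human rather than answer from the model’s memory.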

To make this work in a production banking environment, integration is everything. We need secure, API-driven connections to core banking systems, CRM platforms, and compliance databases—all while maintaining rigorous access controls and privacy protocols. Every action and decision taken by the AI assistant must also be auditable, so we can trace how information was sourced and how decisions were made—crucial for regulatory scrutiny and internal risk management.
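One common way to make an audit trail tamper-evident is a hash chain, in which each log entry stores the hash of the previous one. The sketch below uses only the Python standard library; the field names are illustrative assumptions. A real deployment would also persist entries to write-once storage and anchor the latest hash outside the system, because a chain on its own cannot detect removal of its newest entries.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log where each entry embeds the hash of the previous entry."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash a canonical JSON encoding of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, actor: str, action: str, sources: list[str]) -> dict:
        """Record who did what, and which data sources were used."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "sources": sources,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or removed middle entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```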

In practice, a modern banking AI assistant’s architecture looks like this:

- User Interface (Customers/Employees interacting via chat, web, app)
- Orchestration Layer
  - Conversation Manager (routes queries)
  - Security Gateway (controls access)
- LLM Core
  - Domain-adapted language model
  - Prompt/Context handler
- Retrieval Layer
  - Connectors to:
    - Core Banking Data
    - Policy & Regulatory Database
    - Customer Records & Transactions
    - External APIs (KYC, credit score, etc.)
- Audit & Logging
  - Every action and data source logged
  - Real-time compliance monitoring
- Feedback Loop
  - Human-in-the-loop validation
  - Continuous improvement system
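The layers above can be wired together in many ways. As a minimal sketch, assuming hypothetical role names, connector names, and simple keyword routing (a production conversation manager would typically use an intent classifier), the orchestration layer routes each query and the security gateway check runs before any connector is called:

```python
from typing import Callable, Optional

# Hypothetical role-to-connector permissions enforced by the security gateway.
PERMISSIONS: dict[str, set[str]] = {
    "customer": {"policy", "own_transactions"},
    "relationship_manager": {"policy", "own_transactions", "customer_records"},
}

# Hypothetical keyword-to-connector routing used by the conversation manager.
ROUTES: dict[str, str] = {
    "policy": "policy",
    "circular": "policy",
    "transaction": "own_transactions",
    "statement": "own_transactions",
    "kyc": "customer_records",
}


def route(query: str) -> Optional[str]:
    """Conversation manager: pick the connector that should serve this query."""
    text = query.lower()
    for keyword, connector in ROUTES.items():
        if keyword in text:
            return connector
    return None


def handle(role: str, query: str, connectors: dict[str, Callable[[str], str]]) -> str:
    """Route a query, enforce access control, then call the chosen connector."""
    connector = route(query)
    if connector is None:
        return "ESCALATE: no connector matched"
    # Security gateway: deny before any data is fetched.
    if connector not in PERMISSIONS.get(role, set()):
        return f"DENIED: role '{role}' cannot access '{connector}'"
    return connectors[connector](query)
```

Placing the permission check between routing and retrieval means a denied request never touches customer data, which also keeps the audit trail simple: every fetch that appears in the log was authorized.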

Insight #2:

Building a truly accurate banking AI assistant demands seamless orchestration of secure integrations, live data retrieval, and rigorous auditability at every step.

Data Sources and Grounding: Building a Reliable Foundation

For banks—whether you’re serving urban customers in a major private bank or supporting rural entrepreneurs through a microfinance institution—the accuracy of AI assistants depends directly on the diversity, quality, and governance of the data feeding them.

Take the example of a large private bank rolling out an AI copilot for loan servicing. Here, structured data includes real-time loan balances, repayment histories, and KYC records, while semi-structured sources might involve application PDFs and onboarding forms. Unstructured data comes into play through customer support call transcripts and email queries. If a customer calls to request a change in loan tenure, the AI must seamlessly pull and cross-check their most recent payment data, policy documents, and even voice notes left by the relationship manager. Any missing or outdated information could result in misadvice—leading to a regulatory complaint or a dissatisfied customer.
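Cross-checking sources can be made explicit rather than left to the model. The sketch below, with hypothetical source names and an assumed `tolerance` parameter, picks the freshest reading of a field such as an outstanding loan balance and reports any source that disagrees with it, so the assistant can flag the conflict instead of guessing:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class FieldReading:
    source: str       # hypothetical source name, e.g. "core_banking" or "crm"
    value: float
    as_of: datetime


def reconcile(readings: list[FieldReading], tolerance: float = 0.0) -> tuple[FieldReading, list[str]]:
    """Return the freshest reading and the sources whose values disagree with it."""
    if not readings:
        raise ValueError("no readings to reconcile")
    freshest = max(readings, key=lambda r: r.as_of)
    conflicts = [
        r.source
        for r in readings
        if r is not freshest and abs(r.value - freshest.value) > tolerance
    ]
    return freshest, conflicts
```

For example, if last month’s CRM snapshot shows a different loan balance from this morning’s core banking figure, `reconcile` returns the core banking reading and lists the CRM as conflicting, which is a cue to correct the stale copy before the assistant relies on it.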

In microfinance, the challenges are amplified by the realities of rural banking. Data is often spread across digital ledgers, physical forms scanned into the system, WhatsApp audio messages for loan approvals, and village-level transaction logs maintained by field agents. Here, retrieval-augmented generation (RAG) makes a dramatic difference: imagine an agent asking the AI for a customer’s eligibility for a government-backed subsidy. The assistant must triangulate across recent cash flow records, scanned subsidy forms, and the latest RBI circulars—surfacing an answer that is not just relevant but also fully up-to-date.
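Keeping answers up to date largely comes down to filtering what the retriever is allowed to see. A minimal sketch, assuming each policy document or circular carries an effective date and the ID of the document it supersedes (hypothetical metadata fields), keeps only documents already in force that have not been replaced:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class PolicyDoc:
    doc_id: str
    effective_from: date
    supersedes: Optional[str] = None  # ID of the document this one replaces, if any


def current_documents(docs: list[PolicyDoc], as_of: date) -> list[PolicyDoc]:
    """Keep documents in force on `as_of` that no other in-force document supersedes."""
    in_force = [d for d in docs if d.effective_from <= as_of]
    superseded = {d.supersedes for d in in_force if d.supersedes}
    return [d for d in in_force if d.doc_id not in superseded]
```

Filtering by date as well as by supersession matters: a circular that has been published but is not yet effective should not override the rule customers are still subject to today.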

Yet, as we connect more data, governance becomes mission-critical. For instance, if a microfinance database contains duplicate borrower records or inconsistent Aadhaar numbers, the AI could recommend incorrect loan amounts or even miss early warnings of financial distress. For a large bank, a missed update in the regulatory policy feed could trigger non-compliance with new RBI lending norms.

Best practice means building dedicated data pipelines that filter, validate, and harmonize inputs from every channel—whether that’s field agent uploads, CRM systems, or government APIs. Periodic audits, data quality dashboards, and continuous feedback from frontline teams ensure that both the data and the AI assistant stay accurate and trustworthy, whatever the operating environment.
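As an illustration of one validation step in such a pipeline, the sketch below normalizes Aadhaar numbers, rejects malformed ones, and keeps only the most recently updated record per number. It assumes the 12-digit format with a Verhoeff check digit that Aadhaar numbers are generally documented to use; the record fields are hypothetical, and a real pipeline would apply many more rules.

```python
import re
from dataclasses import dataclass
from datetime import date

# Verhoeff tables: _D is multiplication in the dihedral group D5,
# _P is the position-dependent permutation, _INV gives group inverses.
_D = [
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9], [1, 2, 3, 4, 0, 6, 7, 8, 9, 5],
    [2, 3, 4, 0, 1, 7, 8, 9, 5, 6], [3, 4, 0, 1, 2, 8, 9, 5, 6, 7],
    [4, 0, 1, 2, 3, 9, 5, 6, 7, 8], [5, 9, 8, 7, 6, 0, 4, 3, 2, 1],
    [6, 5, 9, 8, 7, 1, 0, 4, 3, 2], [7, 6, 5, 9, 8, 2, 1, 0, 4, 3],
    [8, 7, 6, 5, 9, 3, 2, 1, 0, 4], [9, 8, 7, 6, 5, 4, 3, 2, 1, 0],
]
_P = [
    [0, 1, 2, 3, 4, 5, 6, 7, 8, 9], [1, 5, 7, 6, 2, 8, 3, 0, 9, 4],
    [5, 8, 0, 3, 7, 9, 6, 1, 4, 2], [8, 9, 1, 6, 0, 4, 3, 5, 2, 7],
    [9, 4, 5, 3, 1, 2, 6, 8, 7, 0], [4, 2, 8, 6, 5, 7, 3, 9, 0, 1],
    [2, 7, 9, 3, 8, 0, 6, 4, 1, 5], [7, 0, 4, 6, 9, 1, 3, 2, 5, 8],
]
_INV = [0, 4, 3, 2, 1, 5, 6, 7, 8, 9]


def verhoeff_check_digit(digits: str) -> str:
    """Compute the check digit to append to `digits`."""
    c = 0
    for i, ch in enumerate(reversed(digits)):
        c = _D[c][_P[(i + 1) % 8][int(ch)]]
    return str(_INV[c])


def verhoeff_valid(digits: str) -> bool:
    """True if the last digit is a correct Verhoeff check digit."""
    c = 0
    for i, ch in enumerate(reversed(digits)):
        c = _D[c][_P[i % 8][int(ch)]]
    return c == 0


@dataclass
class BorrowerRecord:
    record_id: str
    aadhaar: str
    updated_on: date


def clean_records(records: list[BorrowerRecord]) -> tuple[list[BorrowerRecord], list[BorrowerRecord]]:
    """Normalize and validate IDs, then keep the most recent record per ID."""
    accepted: dict[str, BorrowerRecord] = {}
    rejected: list[BorrowerRecord] = []
    for rec in records:
        aadhaar = re.sub(r"[\s-]", "", rec.aadhaar)  # drop spaces and hyphens
        if not re.fullmatch(r"[0-9]{12}", aadhaar) or not verhoeff_valid(aadhaar):
            rejected.append(rec)
            continue
        current = accepted.get(aadhaar)
        if current is None or rec.updated_on > current.updated_on:
            accepted[aadhaar] = BorrowerRecord(rec.record_id, aadhaar, rec.updated_on)
    return list(accepted.values()), rejected
```

Rejected records are returned separately rather than discarded, so they can be routed to a data-quality queue for field staff to correct instead of silently disappearing from the assistant’s view.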

Insight #3:

An assistant’s accuracy is only as good as the data it’s grounded in. Clean, current, and context-rich data sources are non-negotiable.

Techniques for Ensuring and Measuring Accuracy

Banking leaders know that accuracy doesn’t happen by accident; it’s the result of a disciplined, multi-layered approach that’s embedded in the design, deployment, and monitoring of AI assistants.

Fine-Tuning and Prompt Engineering

Let’s take the example of a public sector bank deploying an AI assistant for retail banking queries in multiple regional languages. To ensure that the assistant interprets colloquial questions about government schemes or pension disbursals accurately, the team fine-tunes the underlying language model on thousands of actual customer queries, call transcripts, and policy documents in both English and local dialects. Prompt engineering comes into play when framing the assistant’s instructions—clearly specifying, for instance, that it must always cite the latest RBI circular when responding to regulatory questions.
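An instruction such as “always cite the latest RBI circular” is only as reliable as its enforcement. A minimal sketch, assuming the prompt asks the model to cite bracketed source IDs and that the caller passes in the IDs of the circulars currently in force (both conventions are assumptions of this example), pairs a hypothetical system prompt with a post-generation check that rejects answers citing nothing or citing a circular that was not supplied:

```python
import re

# Hypothetical system prompt; the bracketed-ID convention is an assumption of this sketch.
SYSTEM_PROMPT = (
    "You are a retail banking assistant. For any regulatory question, answer only "
    "from the circulars supplied in the context and cite each one by its ID in "
    "square brackets, for example [ID]. If no circular applies, say so."
)


def check_citations(answer: str, current_ids: set[str]) -> tuple[bool, str]:
    """Accept a regulatory answer only if it cites at least one supplied circular
    and cites nothing outside the supplied set."""
    cited = set(re.findall(r"\[([A-Za-z0-9_./-]+)\]", answer))
    if not cited:
        return False, "no citation"
    unknown = cited - current_ids
    if unknown:
        return False, f"cites sources not in context: {sorted(unknown)}"
    return True, "ok"
```

An answer that fails the check can be regenerated or routed to a human reviewer instead of being shown to the customer.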

Human-in-the-Loop (HITL)

Accuracy is further enhanced through continuous human oversight. Consider a leading private sector bank that builds a human-in-the-loop framework into its AI-powered credit card support assistant. Customer queries flagged as “high uncertainty” or relating to disputed charges are routed to an experienced support manager, who reviews the AI’s draft response before it is delivered. Over time, these interventions become valuable new training data, helping the AI learn from edge cases and complex customer scenarios.
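The routing rule described above can be expressed in a few lines. In this sketch the confidence score, the 0.8 threshold, and the topic labels are hypothetical; how a bank estimates “uncertainty” for a generated answer is a design decision in its own right.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical topic labels that always require human review.
SENSITIVE_TOPICS = {"disputed_charge", "chargeback"}


@dataclass
class Draft:
    query: str
    topic: str
    answer: str
    confidence: float  # assumed to come from the model or a separate scoring step


@dataclass
class ReviewQueue:
    threshold: float = 0.8
    pending: list[Draft] = field(default_factory=list)
    training_examples: list[tuple[str, str]] = field(default_factory=list)

    def dispatch(self, draft: Draft) -> Optional[str]:
        """Return the answer directly, or hold the draft for review and return None."""
        if draft.confidence < self.threshold or draft.topic in SENSITIVE_TOPICS:
            self.pending.append(draft)
            return None
        return draft.answer

    def record_review(self, draft: Draft, final_answer: str) -> None:
        """Release a reviewed draft and keep the reviewer's answer as training data."""
        self.pending.remove(draft)
        self.training_examples.append((draft.query, final_answer))
```

Sensitive topics bypass the confidence threshold entirely, so a disputed charge is reviewed even when the model is confident, which is where a wrong answer would cost the most.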

Automated Testing and Key Metrics

Testing accuracy is an ongoing, living process. For instance, during the rollout of a new AI copilot for loan applications at a private bank, the team might build a synthetic testbed of thousands of potential customer scenarios, ranging from simple requests to adversarial questions designed to “trick” the system, and benchmark the assistant on metrics like factual accuracy, regulatory compliance (did it recommend a loan product in line with RBI rules?), precision/recall, and resolution rate (was the customer’s issue solved without escalation?).
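Once each synthetic scenario has been graded, the headline metrics are straightforward ratios. The sketch below assumes each scenario records a few pass/fail outcomes, and it computes precision and recall over one hypothetical yes/no decision (whether the assistant flagged an application for risk review), since those two metrics only make sense relative to a specific binary decision.

```python
from dataclasses import dataclass


@dataclass
class ScenarioResult:
    factually_correct: bool   # graded against the scenario's reference answer
    compliant: bool           # e.g. recommended product passes the rule checks
    flagged: bool             # assistant flagged the application for risk review
    should_flag: bool         # ground-truth label written into the synthetic scenario
    resolved: bool            # issue solved without escalation to a human


def score(results: list[ScenarioResult]) -> dict[str, float]:
    """Aggregate per-scenario outcomes into the headline test metrics."""
    if not results:
        raise ValueError("no scenarios to score")
    n = len(results)
    tp = sum(r.flagged and r.should_flag for r in results)
    fp = sum(r.flagged and not r.should_flag for r in results)
    fn = sum(r.should_flag and not r.flagged for r in results)
    return {
        "factual_accuracy": sum(r.factually_correct for r in results) / n,
        "compliance_rate": sum(r.compliant for r in results) / n,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "resolution_rate": sum(r.resolved for r in results) / n,
    }
```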

Monitoring Accuracy Over Time

Real-world accuracy can drift as products change, regulations evolve, or customer behavior shifts. Many banks now deploy automated dashboards that track the AI’s performance on live queries, highlighting sudden drops in accuracy or spikes in human escalations. For example, after a major update to personal loan eligibility criteria, a public sector bank’s dashboard quickly flagged a spike in customer confusion, prompting a rapid revision of the assistant’s prompts and data connections.
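A simple way to catch the kind of spike described above is to compare a rolling escalation rate against a baseline. The sketch below assumes a fixed-size window of recent interactions and a hypothetical alert margin; production monitoring would usually add statistical tests and per-product breakdowns.

```python
from collections import deque


class EscalationMonitor:
    """Alert when the rolling escalation rate exceeds a baseline by a set margin."""

    def __init__(self, baseline_rate: float, margin: float = 0.05, window: int = 500) -> None:
        self.baseline_rate = baseline_rate
        self.margin = margin
        self.window: deque[bool] = deque(maxlen=window)

    def observe(self, escalated: bool) -> bool:
        """Record one live interaction; return True if an alert should fire."""
        self.window.append(escalated)
        if len(self.window) < self.window.maxlen:
            return False  # wait for a full window before judging
        rate = sum(self.window) / len(self.window)
        return rate > self.baseline_rate + self.margin
```

Waiting for a full window avoids false alarms at startup, at the cost of a short blind spot right after deployment or restart.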

By investing in these ongoing techniques—fine-tuning, prompt design, human feedback loops, synthetic and live testing, and real-time monitoring—we make accuracy not a one-off achievement, but a living, evolving standard.

Here’s a table of real-world accuracy metrics commonly used by leading banks to monitor and improve their AI assistants:

Deployment Architecture: From Lab to Production

Moving a banking AI assistant from the proof-of-concept stage to full-scale production is a complex undertaking, one that goes beyond just technical integration. The choices we make around deployment architecture can impact everything from data security and compliance to system latency and customer experience.

Deployment Options: Cloud, On-Premise, Hybrid

In India, we’re seeing banks take a strategic approach to deployment. Public sector banks often favor on-premise solutions, especially for critical workloads, due to regulatory requirements on data residency and control. Private banks and new-age digital banks are more likely to adopt a cloud-first or hybrid model, leveraging public cloud platforms for scalability and faster innovation while keeping sensitive data within their own secure data centers. For instance, a major private bank might run its conversational AI in the cloud, but keep customer KYC data and transaction records on-premise, synchronizing only the minimum required information for queries.

Security, Latency, and Uptime

Regardless of the model, security is paramount. Every interaction must be encrypted end-to-end, with robust authentication and authorization gates protecting access to both AI models and underlying data. We must also consider latency—customers expect instant responses, especially during peak hours or high-stress scenarios (like fund transfers). Leading banks architect for high availability with redundant servers and real-time failover capabilities, ensuring the AI assistant remains online even if a data center or cloud region experiences disruption.
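Failover can live in the client as well as in infrastructure. A minimal sketch, assuming each endpoint is a hypothetical callable that takes the query and a timeout in seconds and raises `TimeoutError` or `ConnectionError` when it cannot answer, tries the primary region first and falls back in priority order:

```python
from typing import Callable

# Hypothetical client call signature: (query, timeout_seconds) -> answer.
Endpoint = Callable[[str, float], str]


def call_with_failover(endpoints: list[tuple[str, Endpoint]], query: str,
                       timeout_s: float = 2.0) -> tuple[str, str]:
    """Try endpoints in priority order; return (endpoint_name, answer) from the first success."""
    errors: list[str] = []
    for name, endpoint in endpoints:
        try:
            return name, endpoint(query, timeout_s)
        except (TimeoutError, ConnectionError) as exc:
            errors.append(f"{name}: {type(exc).__name__}")
    raise RuntimeError("all endpoints failed: " + "; ".join(errors))
```

Only timeout and connection failures trigger failover here; any other exception propagates, on the reasoning that a malformed request would fail the same way in every region.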

Model Monitoring and Data Privacy

Once deployed, model monitoring is crucial. Banks now routinely implement real-time dashboards to track response accuracy, latency, error rates, and abnormal usage patterns. Any sign of “drift”—where the AI’s answers start to diverge from policy or reality—triggers an immediate review. Equally critical is data privacy. Role-based access, audit logs, and strict data minimization policies ensure the AI never sees more customer data than absolutely necessary, reducing both regulatory risk and the potential for breaches.
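Data minimization can be enforced mechanically before any record reaches the model. The sketch below assumes hypothetical task names and field allowlists; unknown tasks receive no fields at all, so a new use case must be explicitly approved before it sees customer data.

```python
# Hypothetical field allowlists: which customer fields each assistant task may see.
FIELD_ALLOWLIST: dict[str, set[str]] = {
    "balance_enquiry": {"customer_id", "account_balance"},
    "loan_servicing": {"customer_id", "loan_balance", "next_emi_date", "tenure_months"},
}


def minimize(record: dict[str, object], task: str) -> dict[str, object]:
    """Return only the fields the task is allowed to use; unknown tasks get nothing."""
    allowed = FIELD_ALLOWLIST.get(task, set())
    return {k: v for k, v in record.items() if k in allowed}
```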

Insight #4:

Bringing AI to production in banking is as much about security, privacy, and resilience as it is about innovation—deployment choices directly shape both customer trust and regulatory success.

Regulatory & Risk Considerations

In banking, regulatory compliance isn’t a box to check; it’s a continuous responsibility that shapes every decision we make about AI deployment. Our assistants must navigate a complex web of standards and expectations: from GDPR and India’s Digital Personal Data Protection Act, 2023, to the Reserve Bank of India’s (RBI) stringent guidelines on data residency, KYC, and customer grievance redressal.

Compliance Landscape: GDPR, RBI, and More

GDPR requires a lawful basis, such as customer consent, for processing personal data and gives individuals a right to erasure (the “right to be forgotten”), while the RBI’s directive on storage of payment system data requires payment data to be stored only in India. When deploying AI assistants, we must ensure data flows, storage, and retrieval processes align with these mandates at every step.
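Mandates like these are easiest to honor when they are encoded as a policy check that every proposed data flow must pass. The sketch below is a default-deny gate with hypothetical region names and data categories; its table illustrates the mechanism only and is not a statement of what any regulation permits.

```python
from typing import Optional

# Hypothetical policy: data category -> regions where it may be stored or processed.
# Illustrative entries only; the real table must come from legal and compliance review.
RESIDENCY_POLICY: dict[str, set[str]] = {
    "payment_data": {"in-mumbai", "in-hyderabad"},
    "public_product_info": {"in-mumbai", "in-hyderabad", "eu-frankfurt"},
}
# Categories that contain personal data and therefore need a recorded lawful basis.
PERSONAL_CATEGORIES = {"payment_data"}


def check_flow(category: str, destination_region: str, lawful_basis: Optional[str]) -> list[str]:
    """Return policy violations for a proposed data flow; an empty list means allowed.
    Unknown categories are denied by default."""
    allowed = RESIDENCY_POLICY.get(category)
    if allowed is None:
        return [f"unclassified data category '{category}'"]
    violations: list[str] = []
    if destination_region not in allowed:
        violations.append(f"{category} may not be sent to {destination_region}")
    if category in PERSONAL_CATEGORIES and not lawful_basis:
        violations.append("no recorded lawful basis (such as consent) for personal data")
    return violations
```

Returning every violation, rather than stopping at the first, gives auditors a complete picture of why a flow was blocked.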

Explainability, Audit Trails, and Incident Response

Regulators are increasingly asking not just for accurate outcomes, but for transparency into how those outcomes are generated. Every AI-driven interaction—whether approving a loan or flagging a suspicious transaction—should be explainable, with clear documentation of which data and logic led to a particular decision. Comprehensive audit trails are critical, helping us to reconstruct any interaction for review by internal auditors or external regulators.

We also need robust incident response plans. If the AI assistant provides a faulty recommendation or exposes sensitive data, we must be able to investigate the root cause quickly, notify the appropriate stakeholders, and take corrective action in line with regulatory requirements. This includes everything from customer notification to reporting the breach to authorities within defined timelines.

Managing AI Risk: Scenario Testing and Audits

Proactive risk management means subjecting AI systems to regular scenario testing and stress audits. This could involve simulating rare but high-impact events—such as a regulatory change, a sudden spike in transaction volume, or adversarial attempts to manipulate the system. Regular, independent audits can help us identify and close compliance gaps before they result in real-world penalties.

In short, regulatory and risk considerations must be baked into the design, deployment, and monitoring of banking AI from day one—ensuring that innovation never comes at the expense of compliance or customer trust.

Future Trends in Banking AI Assistants

As we look ahead, it’s clear that the evolution of AI assistants in banking is far from over. New technologies and customer expectations are already reshaping what’s possible—and raising new opportunities and challenges for leaders.

- GenAI Copilots and Autonomous Decisioning

We are now seeing the emergence of next-generation AI copilots powered by advanced generative AI. These systems can synthesize complex information, offer proactive recommendations, and even execute routine transactions autonomously. Imagine an AI that not only notifies a customer of a missed payment but also suggests a personalized repayment plan, drafts a compliant email, and schedules a branch appointment: all in a single, context-aware interaction. As autonomy increases, so does the need for robust governance, guardrails, and human-in-the-loop oversight to avoid unintentional risks or biases.

- Voice-First and Multimodal Banking

The next wave of digital banking is becoming voice-first and multimodal. With more customers, especially in rural and semi-urban India, accessing services via smartphones and voice assistants, banks are investing in AI that can understand, process, and respond in local languages and dialects, across voice, chat, and visual interfaces. The ability to handle queries using voice, text, and even images (for document verification or cheque processing) is redefining accessibility and inclusivity in banking.

- The Rise of Fully Autonomous Banking Copilots

We’re approaching an era where AI copilots could handle entire processes—from onboarding to fraud detection—without routine human intervention. While this promises unprecedented efficiency and reach, it also brings new governance challenges. How do we ensure explainability, fairness, and compliance when decisions are made by self-learning systems?

- Paradigm Shifts in Customer Experience and Risk Management

The greatest impact may be in customer experience and risk. AI copilots that understand context, intent, and emotion can deliver hyper-personalized banking—anticipating needs, preventing problems, and making banking truly proactive. At the same time, advanced AI brings new risk vectors: model drift, adversarial attacks, and regulatory scrutiny. Our role will be to ensure these innovations deliver value while protecting customer interests and the integrity of the financial system.

Vendor/Build Decision: When to Partner vs. Build In-House

As we shape AI strategy, one of the most consequential choices is whether to build banking AI assistants in-house or partner with an external technology expert. There’s no universal answer—the decision depends on existing capabilities, the criticality of data control, and speed-to-market requirements.

Key Criteria for the Decision

- Capability: Does the internal team have the depth of AI, NLP, and domain expertise to design, deploy, and continuously improve a banking-grade AI solution?

- Intellectual Property (IP): Is it essential to own and control the AI models and workflows, or can the business benefit from leveraging trusted third-party solutions?

- Data Sensitivity: Do regulatory or reputational concerns require all data to be managed in-house, or can the bank design secure, compliant data flows with partners?

- Speed to Market: How urgent is the need to launch new AI-powered customer or employee services?

- Scalability and Maintenance: Is the banking institution equipped for ongoing upgrades, retraining, and compliance, or does a partner offer a proven path?

Pros and Cons for Different Bank Profiles

Large banks with significant IT investment may favor in-house builds for control and customization but should be prepared for higher upfront costs and a longer ramp-up. For mid-size, regional, or microfinance banks, the balance often tips toward partnering, as it ensures access to cutting-edge AI without overextending internal resources.

Hybrid Models and Strategic Partnerships

Increasingly, we see successful banks blending both approaches. For example, a bank might use a partner like Superteams.ai for the core AI orchestration and rapid deployment, while customizing data integrations, regulatory logic, or customer experience layers in-house. Superteams.ai can also support banks that want to future-proof their teams, offering co-development models and knowledge transfer.

Whatever the approach, the key is to design flexible partnership models, ensure auditability and transparency, and retain oversight as regulatory expectations and technologies evolve.

Insight #5:

Partnering with experts like Superteams.ai equips banks to accelerate AI adoption, reduce risk, and stay compliant—while maintaining the flexibility and oversight needed to deliver secure, innovative solutions.

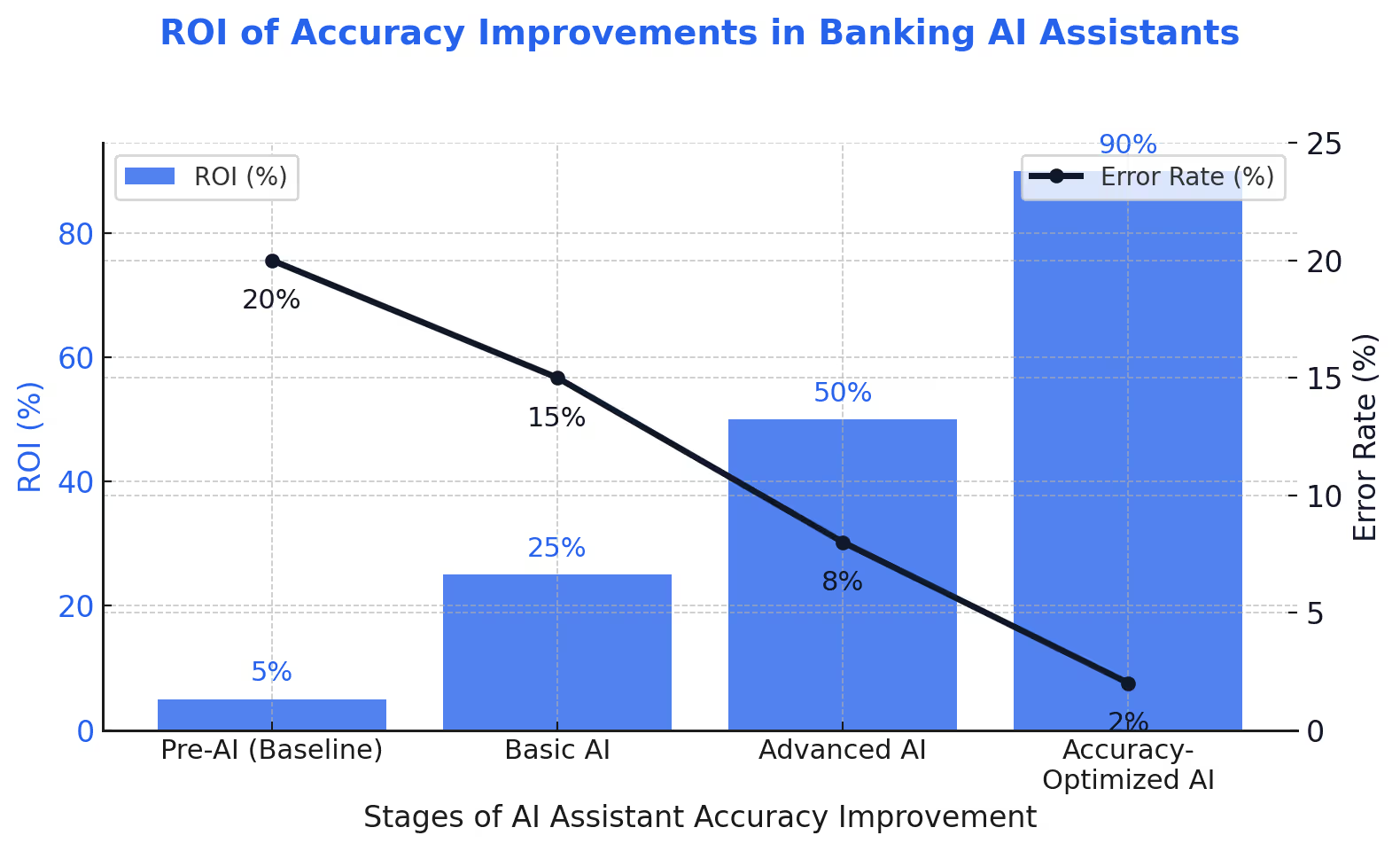

Cost/ROI Analysis: Quantifying the Value of Accuracy

When we invest in the accuracy of AI assistants, we’re unlocking measurable business value.

Methods to Calculate ROI

We start by comparing the costs of building, integrating, and maintaining a highly accurate AI assistant with the tangible returns. Direct benefits include a reduction in costly errors (such as misrouted payments or compliance breaches), fewer escalations to human staff, and measurable improvements in customer satisfaction. Indirect benefits—often just as valuable—include higher Net Promoter Scores (NPS), greater customer loyalty, and a lower risk of regulatory fines.

A typical calculation might look like this:

- Cost of Inaccuracy: Estimate historical costs of errors (e.g., compensation for mistakes, lost customers, regulatory penalties).

- Accuracy Investment: Total spend on AI model improvements, data quality initiatives, and staff training.

- Benefit Realization: Track the decline in error rates, customer complaints, and compliance issues after the AI upgrade, as well as uplift in NPS and digital engagement.

- Payback Period: Calculate how quickly the investment in accuracy “pays for itself” through these savings and revenue boosts.
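The calculation above reduces to simple arithmetic. A minimal Python sketch, assuming all figures are annual amounts in one currency and that the accuracy investment is a one-off upfront cost (both simplifications), computes the payback period in months; the function and parameter names are illustrative:

```python
def payback_months(accuracy_investment: float,
                   annual_error_cost_before: float,
                   annual_error_cost_after: float,
                   annual_running_cost: float,
                   annual_revenue_uplift: float = 0.0) -> float:
    """Months until cumulative net benefit covers the upfront accuracy investment."""
    net_annual_benefit = (annual_error_cost_before - annual_error_cost_after
                          + annual_revenue_uplift - annual_running_cost)
    if net_annual_benefit <= 0:
        return float("inf")  # the investment never pays back on these assumptions
    return 12 * accuracy_investment / net_annual_benefit
```

With an upfront spend of 1,200,000, error costs falling from 2,000,000 to 800,000 a year, 100,000 of annual revenue uplift, and 300,000 of annual running costs, the net annual benefit is 1,000,000 and payback takes 12 × 1,200,000 / 1,000,000 = 14.4 months. These figures are illustrative, not benchmarks.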

Industry Benchmarks

Commonly cited industry benchmarks suggest that leaders can achieve:

- 40–60% reduction in customer service escalations.

- 30%+ improvement in compliance incident resolution speed.

- Uplift of 10–20 points in customer satisfaction scores within a year of launching an advanced, accuracy-focused AI assistant.

Regularly benchmarking your own metrics against these figures helps you stay competitive and provides a roadmap for where to focus next.

Conclusion

As we’ve seen, accuracy is the new baseline for trust, resilience, and innovation in banking AI. Every advancement, from smarter assistants to autonomous copilots, is only as valuable as the accuracy and reliability we build into these systems.

Now is the time for banking leaders to take a proactive role in this transformation. By prioritizing investment in data quality, robust architectures, and continuous monitoring, they can set the stage for AI copilots that are not only intelligent, but truly bank-grade—trusted by customers, endorsed by regulators, and celebrated by teams.